In this article, you’ll learn what LoRA is, why it is so popular, and how to train a LoRA offline.

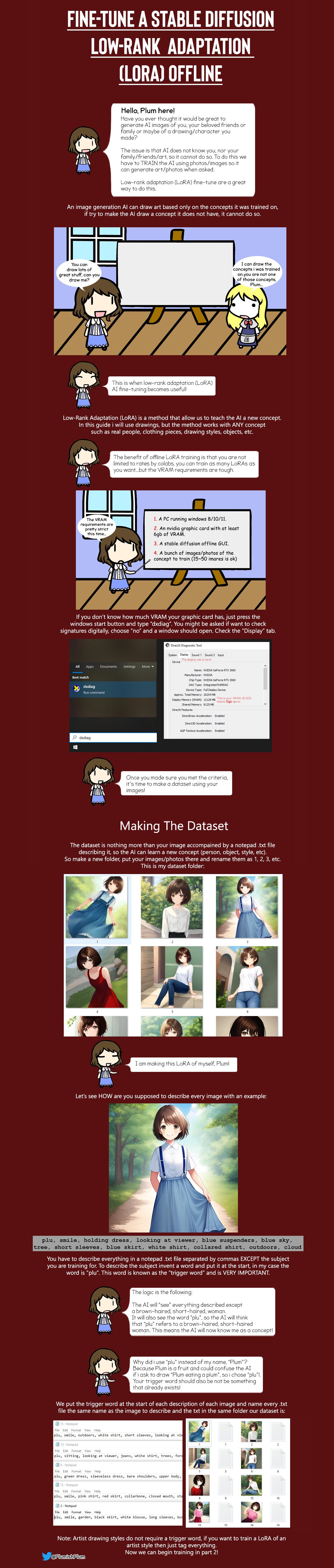

This tutorial is for beginners who haven’t used LoRA models before. We have also included an infographic version of the Stable Diffusion LoRA training guide.

Training LoRAs is like cooking food. You gather the best ingredients (training images) and follow the right steps (settings), but once you start training, you can only hope that everything goes well.

You won’t know if it’s successful until it’s done, although you can check on its progress.


What Is LoRA In Stable Diffusion?

LoRA, or Low-Rank Adaptation, is a method for making Stable Diffusion models better at specific tasks, like creating characters or styles.

LoRA models are small files that apply changes to a large Stable Diffusion checkpoint model rather than replacing it. They give you a good mix of small file size and strong training ability.

Why Is LoRA Special?

But why is LoRA special when we already have training techniques like Dreambooth and textual inversion? LoRA offers a good balance between file size and training power.

Dreambooth is powerful but produces large model files (2-7 GB). Textual inversions are tiny (about 100 KB), but they are limited in how much they can change.

LoRA falls in between. Its file size is more manageable (2-200 MB), and it still provides good training power.

People who use Stable Diffusion models know that their local storage quickly fills up because of their large size. It’s hard to maintain a collection on a personal computer. LoRA is an excellent solution to this storage problem.

Similar to textual inversion, you can’t use a LoRA model alone. It needs to be used with a model checkpoint file. LoRA modifies styles by making small changes to the accompanying model file.

LoRA is a great way to customize AI art models without taking up too much storage space.

LoRA applies small changes to the most important part of Stable Diffusion models: the cross-attention layers. These layers are where the image and the prompt come together.

Researchers have found that fine-tuning this part of the model is sufficient for good training. The cross-attention layers are highlighted in yellow in the Stable Diffusion model architecture.

The weights of a cross-attention layer are organized in matrices, which are like tables with rows and columns. A LoRA model fine-tunes a model by adding its weights to these matrices.
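To make the idea of "adding weights to a matrix" concrete, here is a minimal NumPy sketch. It is an illustration only, not how any particular Stable Diffusion tool implements it: the matrix sizes are tiny placeholders, and the names `base_weight`, `lora_delta`, `apply_lora`, and `strength` are assumptions made up for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for one cross-attention weight matrix (the 4 x 6 size is illustrative).
base_weight = rng.standard_normal((4, 6))

# Stand-in for the change that a LoRA file contributes to that matrix.
lora_delta = 0.01 * rng.standard_normal((4, 6))


def apply_lora(weight, delta, strength=1.0):
    """Return a new matrix: the original weights plus the scaled LoRA change.

    `strength` is a hypothetical multiplier for how strongly the LoRA is applied.
    """
    return weight + strength * delta


merged = apply_lora(base_weight, lora_delta, strength=0.8)

# The merged matrix keeps the same shape as the original.
print(merged.shape)

# With strength 0 the checkpoint weights are left unchanged.
print(np.allclose(apply_lora(base_weight, lora_delta, strength=0.0), base_weight))
```

The checkpoint's own weights are never overwritten in this sketch, which mirrors the point above that a LoRA cannot be used alone and always works alongside a checkpoint file.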

How Does LoRA Work In Stable Diffusion?

How can LoRA model files be smaller if they still need to store the same number of weights? The trick of LoRA is to break down a matrix into two smaller matrices, called low-rank matrices. By doing this, it needs to store fewer numbers. Let’s illustrate this with an example.

Imagine the model has a big grid with 1,000 rows and 2,000 columns, which is like storing 2 million numbers. LoRA does something clever.

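It splits the grid into two smaller ones, which together need only 6,000 numbers (1,000 x 2 + 2 x 2,000). That is about 333 times fewer numbers to store, which is why LoRA files are so much smaller.

In this example, the two smaller grids have a rank of 2, which is the size of the dimension they share (the "2" in 1,000 x 2 and 2 x 2,000). That is far lower than the original dimensions, which is why they are called low-rank matrices. Restricting the change in this way generally doesn’t hurt the model’s performance much during fine-tuning.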
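The arithmetic in this example can be checked with a short NumPy sketch. The 1,000 x 2,000 grid and the rank of 2 come from the example above; the variable names `down` and `up` and the random values are assumptions used purely for illustration.

```python
import numpy as np

rows, cols, rank = 1000, 2000, 2

# Numbers stored by the full grid versus the two low-rank grids.
full_count = rows * cols                # 2,000,000
lora_count = rows * rank + rank * cols  # 6,000
print(full_count, lora_count, round(full_count / lora_count, 1))
# prints: 2000000 6000 333.3

# Two small grids filled with random placeholder values.
rng = np.random.default_rng(0)
down = rng.standard_normal((rows, rank))  # 1,000 x 2
up = rng.standard_normal((rank, cols))    # 2 x 2,000

# Multiplying them gives a grid with the same shape as the original one.
delta = down @ up
print(delta.shape)  # prints: (1000, 2000)

# The product can never have a rank higher than the shared dimension.
print(np.linalg.matrix_rank(delta) <= rank)  # prints: True
```

The trade-off is visible in the last line: the product fills the full 1,000 x 2,000 grid, but it can only represent changes of rank 2 or lower, so it is a compact approximation rather than an arbitrary grid of 2 million independent numbers.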

This was a basic explanation of how a Stable Diffusion LoRA works. Now, let’s dive into training a Stable Diffusion LoRA offline.

LoRA Stable Diffusion Training Guide - Infographic

In conclusion, LoRA models offer a great chance to expand and improve Stable Diffusion models without dealing with big file sizes.

If you know how to install and use them correctly, you can add various styles and effects to your AI-generated images.

Just remember, the key to using LoRA models successfully is to understand what each model needs, adjust the settings accordingly, and try out different combinations of models and embeddings.

We hope you find this Stable Diffusion LoRA training guide helpful. One more thing: credit for the images used in this article goes to Plumish Plum. Have fun creating!
