A new study by Stanford HAI (the Stanford Institute for Human-Centered Artificial Intelligence) has found that major AI companies such as OpenAI, Stability AI, Google, Anthropic, BigScience, Meta, and others are not disclosing enough information about their AI models and how those models affect people's lives.

Stanford HAI has released a report called the Foundation Model Transparency Index. The report assesses whether the developers of ten popular AI models disclose information about how they build their models and how people use their systems.

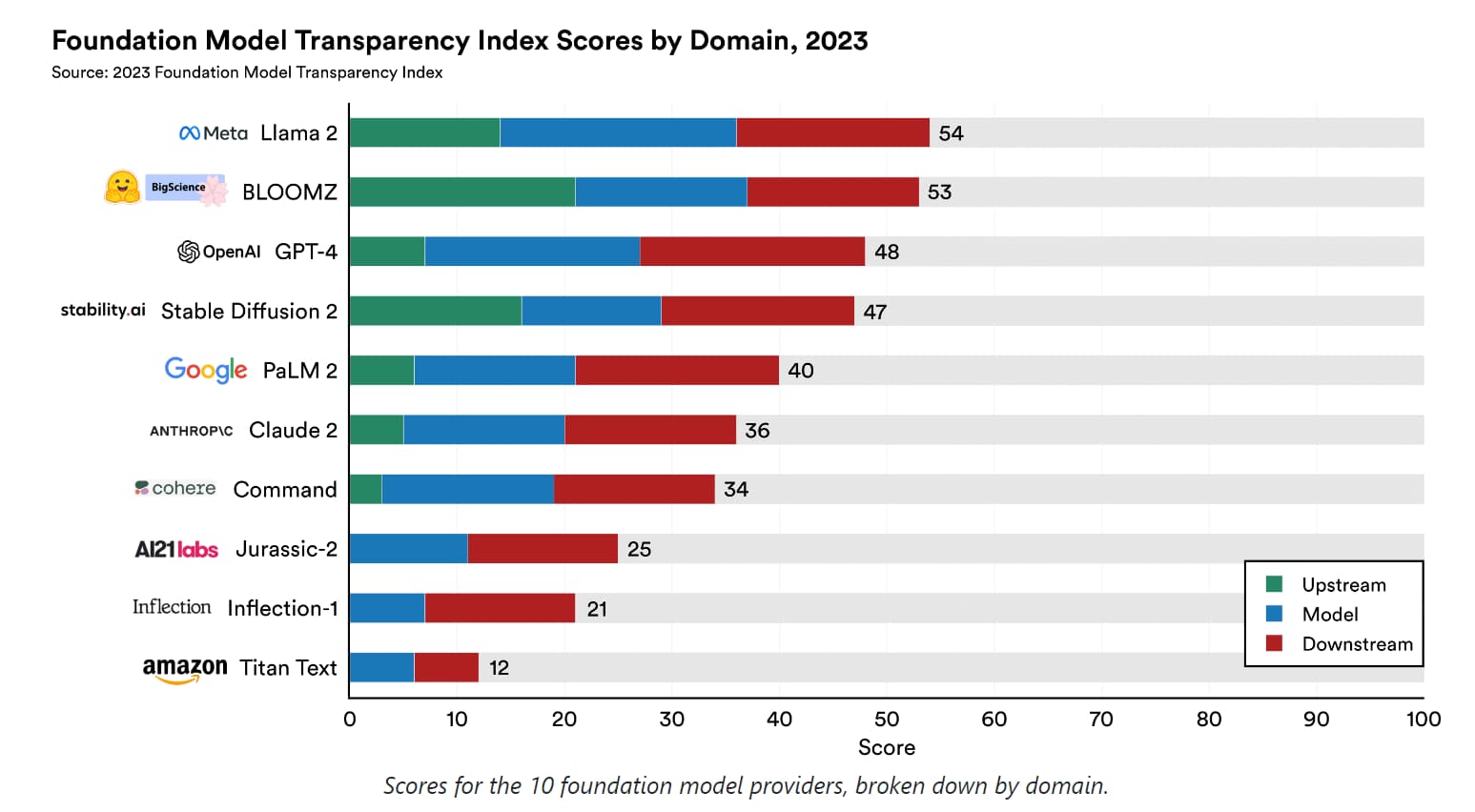

Of the models evaluated, Meta's Llama 2 was the most transparent, followed by BigScience's BLOOMZ and OpenAI's GPT-4. None of them, however, scored particularly high.

The other seven models evaluated were Stability AI's Stable Diffusion, Anthropic's Claude, Google's PaLM 2, Cohere's Command, AI21 Labs' Jurassic-2, Inflection's Inflection-1, and Amazon's Titan.

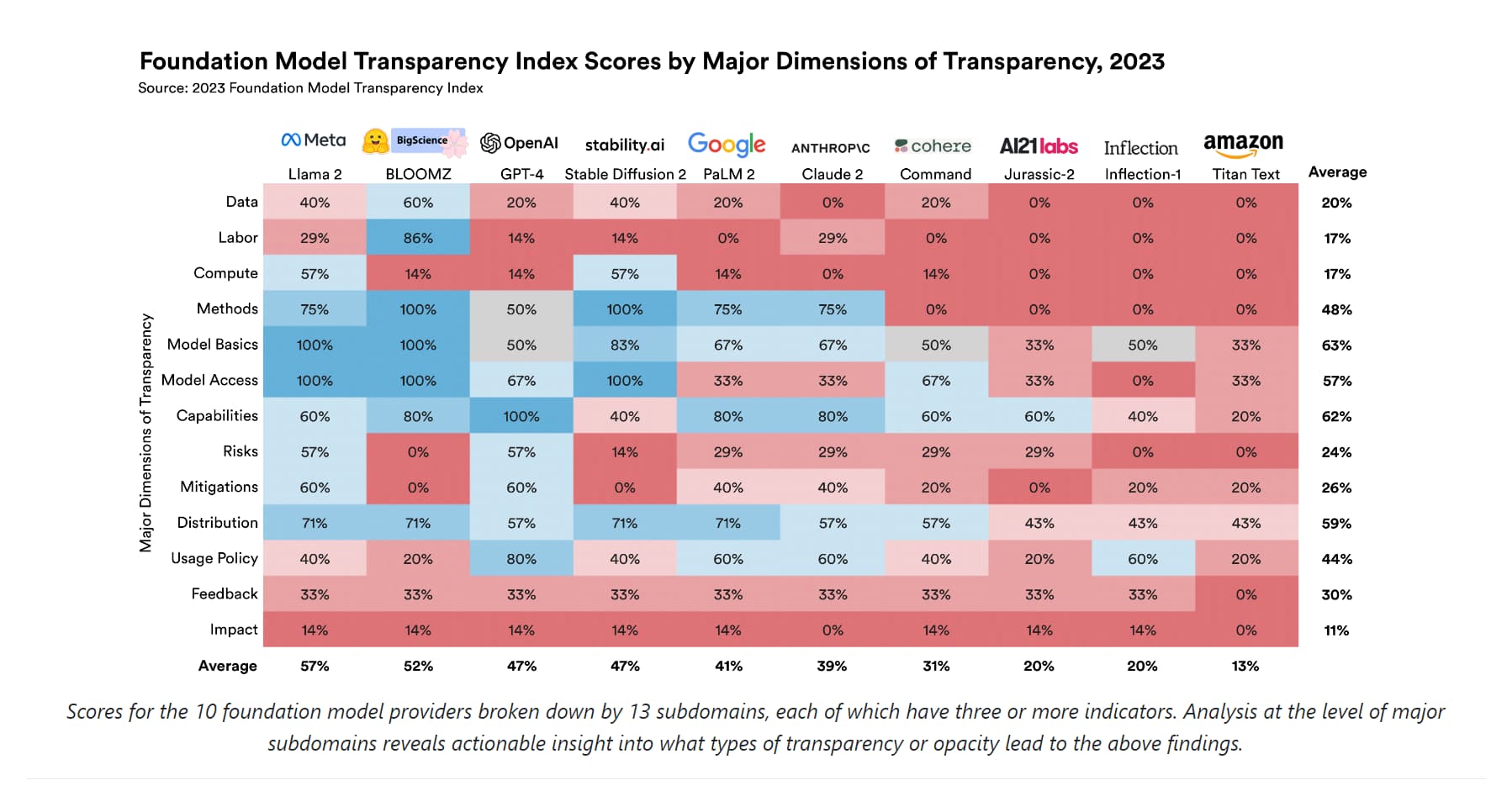

The researchers noted that "transparency" is a broad concept. To measure it, they scored each model against 100 indicators covering how the model is built, how it works, and how it is used.

They also checked whether the companies disclose information about their partners and third-party developers, whether they tell people if private information is used, and other factors.

Llama 2 scored 54%, BLOOMZ 53%, and GPT-4 48%. Although OpenAI discloses little about its research or where its data comes from, GPT-4 scored relatively well because a lot of information about its partners is publicly available.

OpenAI works with many companies that build GPT-4 into their products, so there is a lot of public information about how the model is used.

None of the companies disclosed information about their models' impact on society, such as effects on privacy, copyright, or bias.

Rishi Bommasani, who works at the Stanford Center for Research on Foundation Models and contributed to the study, said the report aims to set a benchmark for governments and companies. Upcoming regulations such as the EU's AI Act could require large AI companies to disclose more information.

Bommasani explained, “Our aim with this report is to make these models more open. We want to turn this vague idea of being ‘transparent’ into specific things we can measure. We looked at one model from each company to make it easier to compare.”

Some AI models have large communities that openly share and discuss them, but some of the biggest companies in AI do not release their research or code. OpenAI, for example, no longer shares its research, citing competitive and safety concerns.

Bommasani said the team may add more models to the index in the future, but for now it is focusing on the ten models it has already evaluated.
