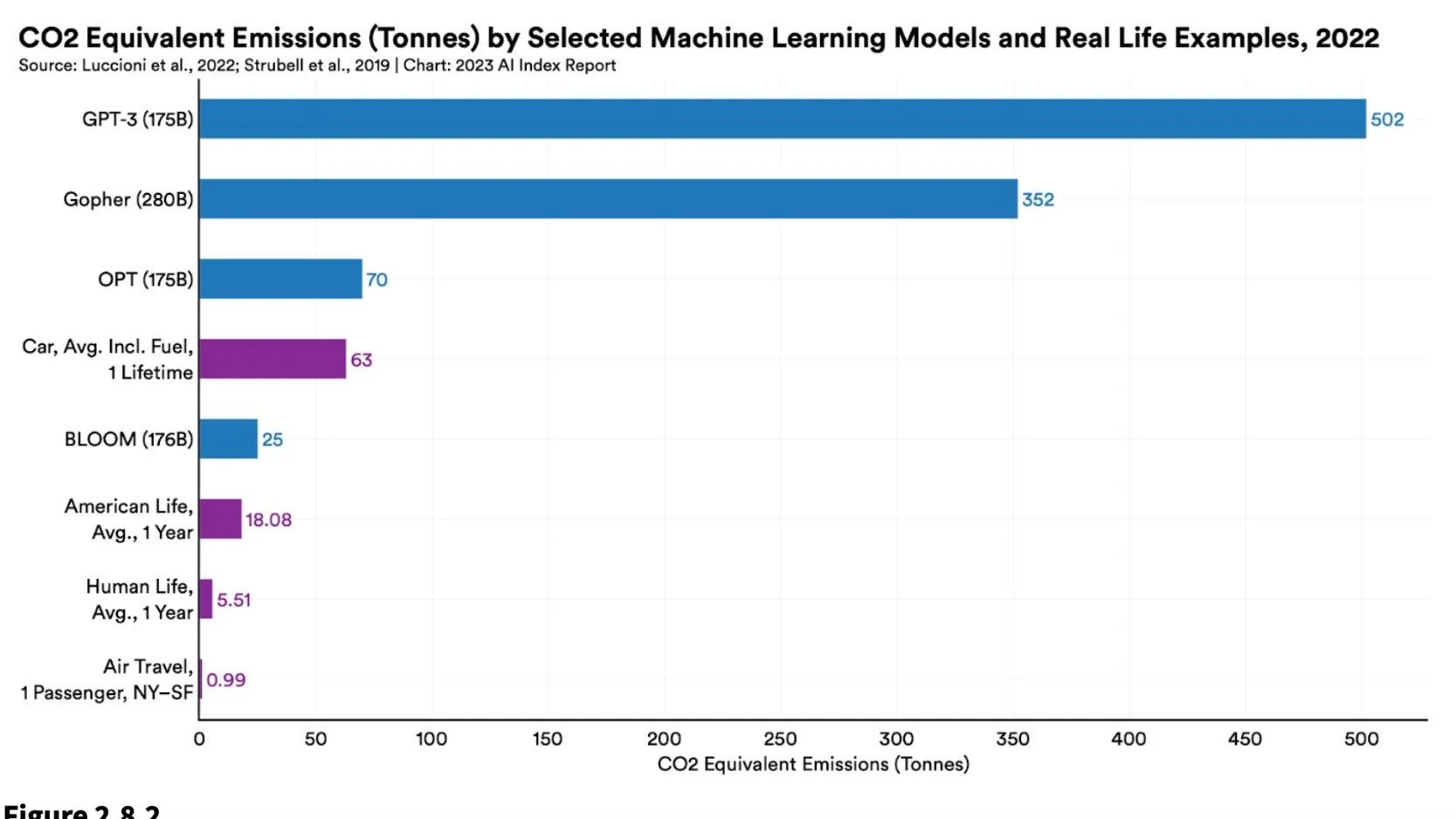

According to a new report from the Stanford Institute for Human-Centered Artificial Intelligence, the energy required to train AI models such as OpenAI’s GPT-3, which drives ChatGPT, is estimated to be enough to power an average American home for centuries. Of the four AI models examined in the study, OpenAI’s system was by far the most energy-intensive.

The study, highlighted in Stanford’s recently published Artificial Intelligence Index, draws on recent research measuring the carbon footprint of training four models: DeepMind’s Gopher, BigScience’s BLOOM, Meta’s OPT, and OpenAI’s GPT-3.

During its training, OpenAI’s model emitted an estimated 502 metric tons of carbon. To put this into perspective, that is 1.4 times the emissions of Gopher and a staggering 20.1 times those of BLOOM.
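As a rough sanity check on those ratios, the short Python sketch below back-calculates the emissions they imply for Gopher and BLOOM. It assumes the ratios mean "GPT-3 emitted N times as much as the other model" and that they are rounded, so the results are approximations rather than figures taken from the report. The function name `implied_emissions` is made up for this illustration.

```python
# Figures quoted in the article.
GPT3_TONNES = 502          # metric tons of carbon emitted training GPT-3
RATIO_VS_GOPHER = 1.4      # GPT-3 emissions relative to Gopher
RATIO_VS_BLOOM = 20.1      # GPT-3 emissions relative to BLOOM


def implied_emissions(reference_tonnes: float, ratio: float) -> float:
    """Emissions of the other model, assuming reference = ratio * other."""
    return reference_tonnes / ratio


implied_gopher = implied_emissions(GPT3_TONNES, RATIO_VS_GOPHER)
implied_bloom = implied_emissions(GPT3_TONNES, RATIO_VS_BLOOM)

print(f"Implied Gopher emissions: about {implied_gopher:.0f} metric tons")
print(f"Implied BLOOM emissions:  about {implied_bloom:.0f} metric tons")
```

Under that reading, Gopher comes out in the mid-300s of metric tons and BLOOM at roughly 25, which shows how wide the gap between the models is.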

The energy consumption of each model was determined by a range of factors, including the number of parameters it contains and the efficiency of the data center in which it was trained. Despite the significant differences in energy consumption, three of the four models (all but DeepMind’s Gopher) have roughly 175 billion parameters.

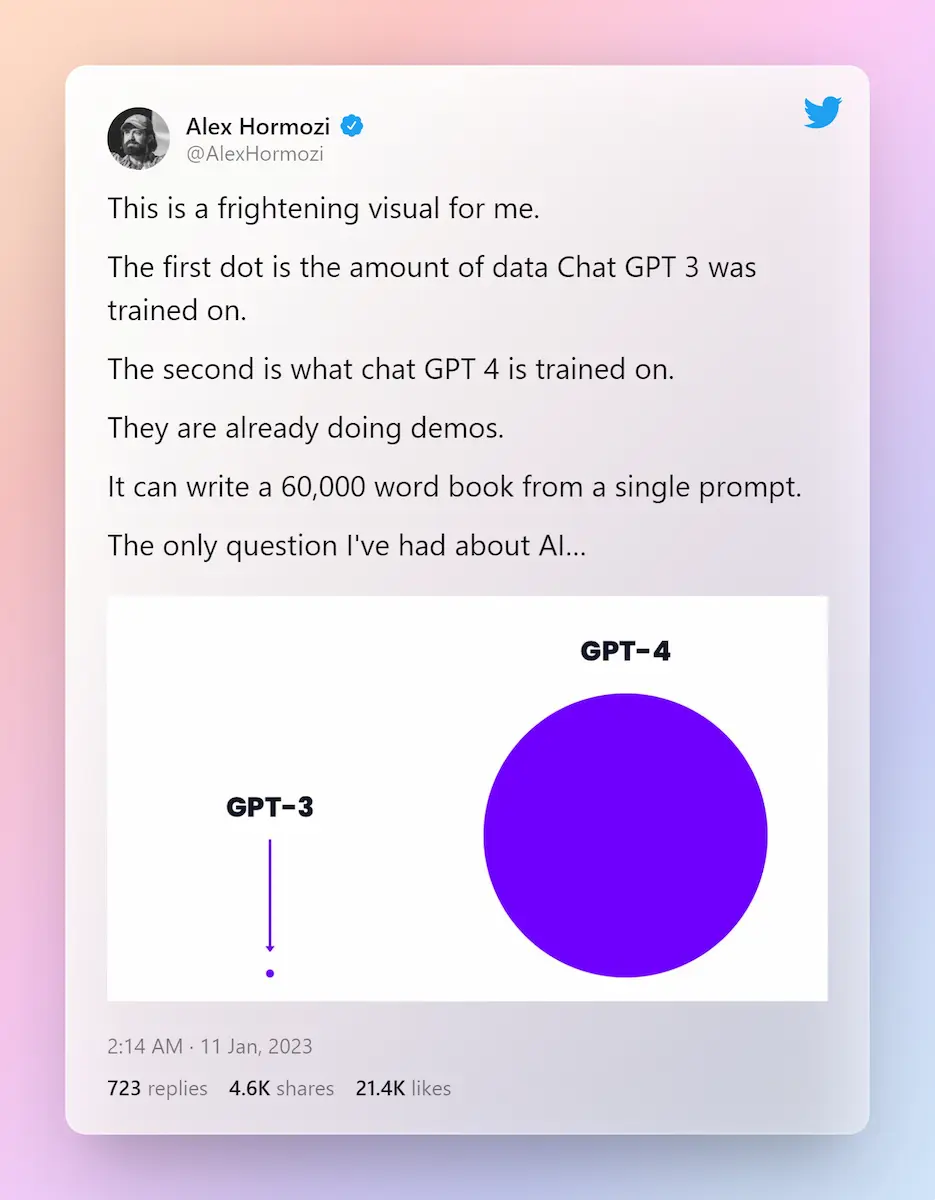

OpenAI has not disclosed how many parameters its newly released GPT-4 has, but the model is believed to require even more data than its predecessors, given the significant increase in data requirements between versions. One AI enthusiast estimated that GPT-4 could have 100 trillion parameters, but OpenAI CEO Sam Altman has dismissed this claim as “complete bullshit.”

Last year, Stanford researcher Peter Henderson warned that if AI models continue to scale without regard for their environmental impact, they could do more harm than good. He emphasized the need to mitigate this impact and prioritize net social good. However, the report suggests that it may be premature to conclude that data-hungry AI models are an environmental disaster.

These powerful AI models could be leveraged to optimize energy consumption in data centers and other environments. For example, in a three-month experiment, DeepMind’s BCOOLER agent achieved approximately 12.7% energy savings in a Google data center while maintaining a comfortable working environment for people.


AI’s Environmental Impact: The Brother Of Crypto Climate Crisis?

The current environmental concerns surrounding large language models (LLMs) are reminiscent of the criticisms leveled against the cryptocurrency industry a few years ago. In the case of crypto, Bitcoin was widely recognized as the primary culprit behind the industry’s significant environmental impact, owing to the massive energy consumption involved in mining coins through its proof-of-work model. Some estimates indicate that Bitcoin alone consumes more electricity each year than all of Norway.

However, after years of criticism from environmental activists, the crypto industry has undergone some changes. Ethereum, the second-largest cryptocurrency, officially transitioned to a proof-of-stake model last year, which proponents claim could reduce power usage by more than 99%. Other smaller coins were also designed with energy efficiency in mind. Because LLMs are still in their early stages, it remains uncertain how they will fare on their environmental report card.

In addition to their energy requirements, the price tags for LLMs are also skyrocketing. The report highlights that large language and multimodal models are becoming increasingly expensive. When OpenAI released its GPT-2 model in 2019, the company spent just $50,000 to train its 1.5 billion parameters. Just three years later, in 2022, Google’s PaLM model, with 540 billion parameters, cost $8 million to train. According to the report, PaLM was approximately 360 times larger than GPT-2 and cost 160 times more. These models, whether from OpenAI or Google, are only growing in size, and their price tags are increasing accordingly.
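The size and cost comparison between GPT-2 and PaLM can be reproduced with a few lines of Python. The sketch below uses only the figures quoted above. The cost-per-billion-parameters number is a back-of-the-envelope derivation added here for illustration, not a metric from the report, and it ignores differences in training data, hardware, and training duration.

```python
# Figures quoted in the article.
GPT2_PARAMS = 1.5e9        # parameters
GPT2_COST_USD = 50_000     # training cost
PALM_PARAMS = 540e9        # parameters
PALM_COST_USD = 8_000_000  # training cost


def cost_per_billion_params(cost_usd: float, params: float) -> float:
    """Training cost in USD per billion parameters (illustrative metric)."""
    return cost_usd / (params / 1e9)


size_ratio = PALM_PARAMS / GPT2_PARAMS      # how much larger PaLM is
cost_ratio = PALM_COST_USD / GPT2_COST_USD  # how much more PaLM cost

gpt2_unit_cost = cost_per_billion_params(GPT2_COST_USD, GPT2_PARAMS)
palm_unit_cost = cost_per_billion_params(PALM_COST_USD, PALM_PARAMS)

print(f"PaLM is {size_ratio:.0f}x larger and cost {cost_ratio:.0f}x more")
print(f"GPT-2: ${gpt2_unit_cost:,.0f} per billion parameters")
print(f"PaLM:  ${palm_unit_cost:,.0f} per billion parameters")
```

On these numbers alone, total cost rose more slowly than parameter count, so the cost per parameter actually fell even as the overall bill grew.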

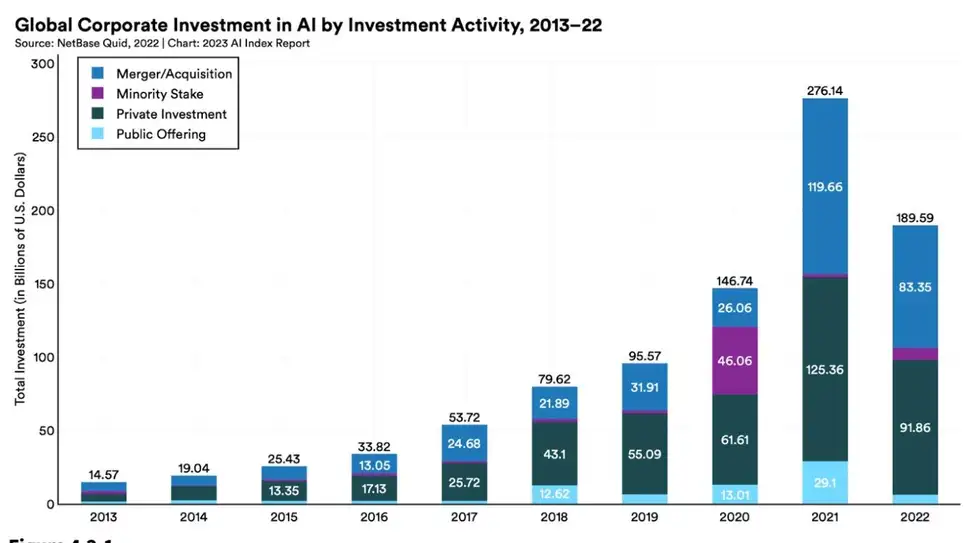

This surge in AI investment is also carrying over into the wider economy. According to Stanford, global private investment in AI in 2022 was 18 times larger than in 2013. Job postings related to AI are also increasing across every sector in the US. The US remains unmatched in overall AI investment, having reportedly invested $47.4 billion in AI tech in 2022, 3.5 times more than China, the next largest spender.

What Are Lawmakers Doing About ChatGPT?

Lawmakers are gradually becoming more aware of the recent wave of powerful chatbots and the ethical and legal questions surrounding them.

The Stanford report notes that in 2021, only 2% of all federal bills related to AI passed into law, but that number increased to 10% in 2022. Many of these bills were written before the current concerns surrounding GPT-4 and premature descriptions of it as “artificial general intelligence.”

In 2022, Stanford identified 110 AI-related legal cases brought in US federal and state courts, 6.5 times more than in 2016. Most of these cases were filed in California, Illinois, and New York, with 29% involving civil law and 19% concerning intellectual property rights. With recent complaints from writers and artists about AI generators’ use of their style, the share of intellectual property cases could increase.
