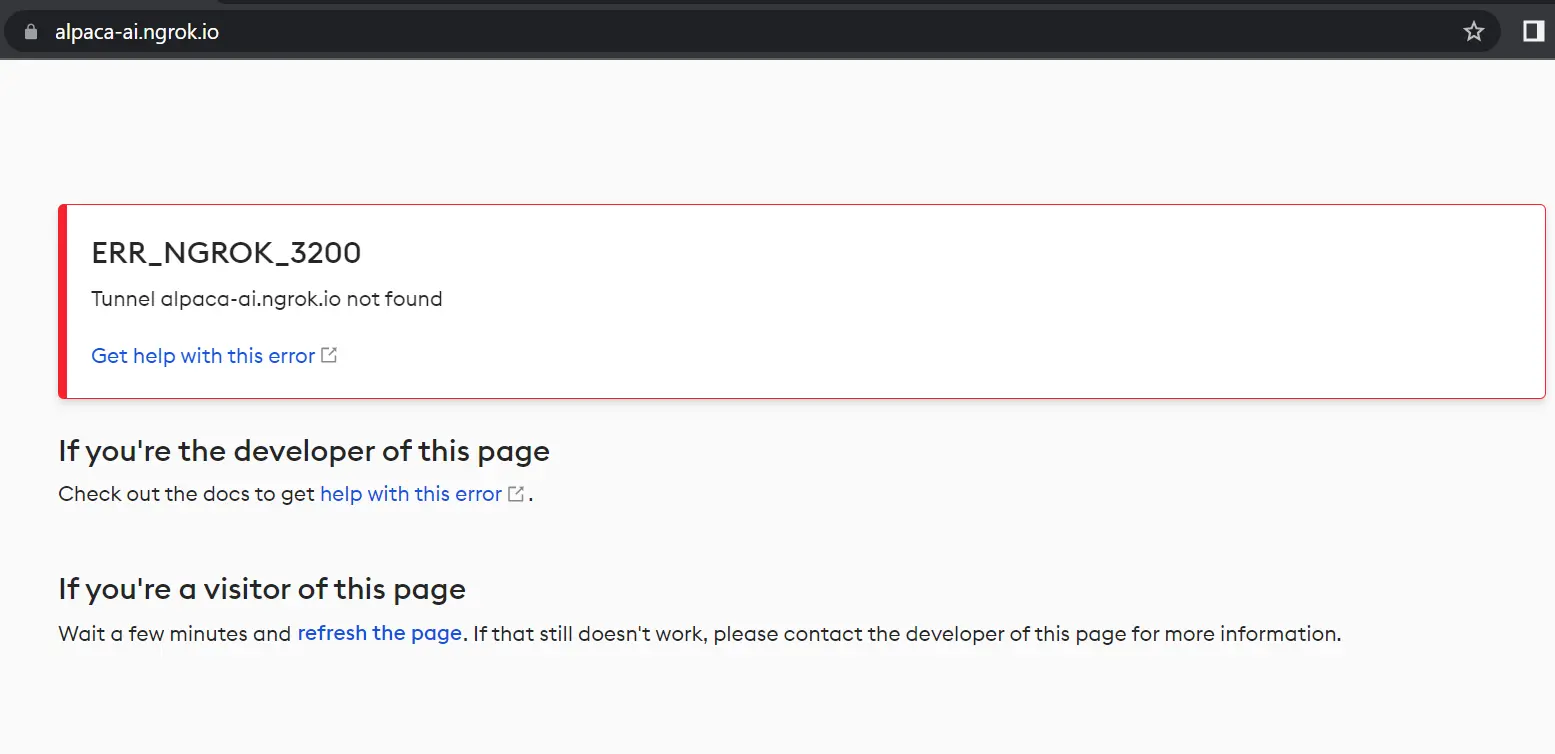

According to reports, Stanford researchers have taken their AI, Alpaca, offline shortly after revealing a demo. The ChatGPT clone was removed due to concerns about safety and costs. A spokesperson for Stanford’s Human-Centered Artificial Intelligence institute explained that the primary goal of releasing the demo was to make their research more accessible. Given the hosting costs and the inadequacy of the content filters, the institute decided to take the demo down once that goal had been achieved.

While the decision to remove Alpaca was influenced by practical concerns such as hosting costs and safety, the model itself was cheap to build: Alpaca was created by fine-tuning Meta’s existing LLaMA 7B large language model on a budget of only $600. The main aim was to showcase how affordable it can be to match the capabilities of tech giants such as OpenAI and Google. However, keeping the Alpaca demo running proved to be more costly than expected.

Alpaca, the AI model developed to mimic its inspiration, may have copied that model’s undesirable traits a little too faithfully. One of these traits is the tendency to spout misinformation, which the AI industry calls “hallucination”, a characteristic failure of LLMs.

The researchers were unsurprised by this outcome, as they had already acknowledged Alpaca’s shortcomings in their announcement. They stated that hallucination in particular is a common failure mode for Alpaca, and that it occurs more frequently than in GPT-3.5.

One of Alpaca’s obvious failures is giving incorrect information about the capital of Tanzania. It also readily churns out convincingly written false information about why the number 42 is the optimal seed for training AIs, without pushing back on the premise.

The researchers did not provide full details on how Alpaca deviated from and took liberties with the truth once it became accessible to the public. However, based on ChatGPT’s own mistakes, it is not difficult to envision what those errors might have looked like or how serious the problem might have been.

Despite its imperfections, Alpaca’s code remains accessible on GitHub for those who want to build on the discoveries made by the Stanford researchers. The creation of Alpaca is still considered a success in the realm of low-budget AI engineering, whether that success is beneficial or not.

The researchers stated in the release that they encouraged users to help them discover new kinds of failures by reporting them in the web demo. They also hope that the release of Alpaca will enable further exploration into instruction-following models and their alignment with human values.
