Meta has unveiled Llama 3, the latest version of its large language model (LLM), which is available on Microsoft Azure and to other users.

The Meta AI assistant now integrates real-time search results from both Bing and Google, choosing which search engine to use based on the prompt.

It has upgraded its image generation to produce animations (GIFs) and high-resolution images on the fly as you type.

When you open a chat window, a panel of prompt suggestions, inspired by Perplexity, appears to clarify what the chatbot can do.

Meta AI, previously available only in the US, is expanding to English-speaking countries including Australia, Canada, Ghana, Jamaica, Malawi, New Zealand, Nigeria, Pakistan, Singapore, South Africa, Uganda, Zambia, and Zimbabwe.

This expansion marks a step towards Meta’s goal of reaching its more than 3 billion daily users globally, although it falls short of the initial vision of a fully global AI assistant.

Zuckerberg views Meta’s extensive reach and adaptability as its competitive advantage, evident in his strategy for Meta AI. By widely integrating it and investing heavily in foundational models, he aims to replicate the success Meta has achieved.

Meta AI is not currently top of mind for many when considering popular AI assistants, according to a statement from an expert in the field.

However, they anticipate a significant shift, expecting Meta AI to become a prominent product as it gains more exposure and integration.

Everything About Meta’s Llama 3

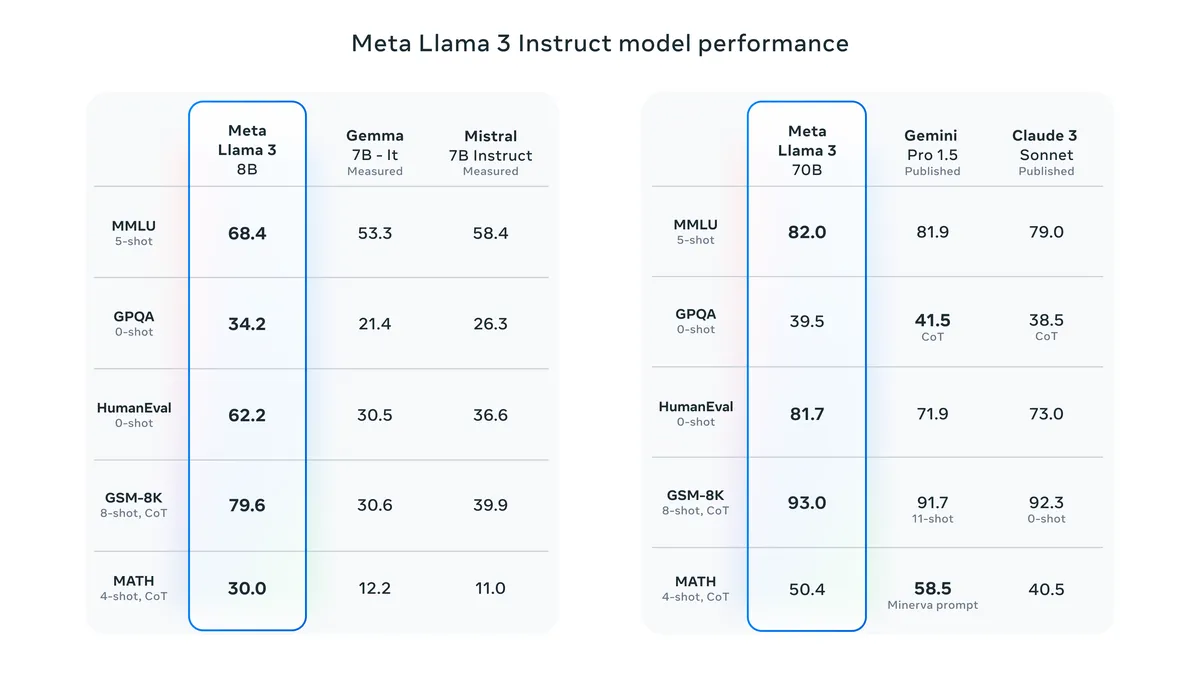

Meta is releasing two open-source Llama 3 models for external developers. These models include an 8-billion parameter version and a larger 70-billion parameter version. Both models will be available on major cloud platforms for free use.

Llama 3 showcases the rapid advancement of AI models, demonstrated by its significant scaling.

The largest version of Llama 3 will have over 400 billion parameters, a substantial increase from the 70 billion parameters of the largest Llama 2 model.

Llama 2 was trained on 2 trillion tokens, while the large version of Llama 3 was trained on more than 15 trillion tokens. OpenAI hasn’t officially disclosed the parameter count or the number of training tokens for GPT-4 yet.
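To put those figures side by side, the scale-up can be worked out with simple division. The snippet below is only an illustrative sketch: the constant and function names are made up for this example, and because the Llama 3 figures are reported as "over" 400 billion and 15 trillion, the resulting ratios should be read as lower bounds.

```python
# Figures as reported in this article. The Llama 3 values are lower bounds
# ("over 400 billion", "over 15 trillion"), so the ratios are lower bounds too.
LLAMA2_PARAMS = 70e9    # largest Llama 2 model
LLAMA3_PARAMS = 400e9   # largest Llama 3 model (still in training)
LLAMA2_TOKENS = 2e12    # Llama 2 training tokens
LLAMA3_TOKENS = 15e12   # Llama 3 training tokens


def scale_factor(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


param_ratio = scale_factor(LLAMA3_PARAMS, LLAMA2_PARAMS)
token_ratio = scale_factor(LLAMA3_TOKENS, LLAMA2_TOKENS)

print(f"Parameters: at least {param_ratio:.1f}x larger")
print(f"Training tokens: at least {token_ratio:.1f}x larger")
```

On these numbers, the largest Llama 3 model has roughly 5.7 times as many parameters as the largest Llama 2 model and was trained on at least 7.5 times as many tokens.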

One goal for Llama 3 was to significantly reduce false refusals, instances where the model declines to respond to harmless prompts. Zuckerberg illustrates this with examples like asking the model to make a “killer margarita” or to advise on breaking up with someone.

Meta is deliberating whether to open source the 400-billion-parameter version of Llama 3, which is still in training.

Zuckerberg suggests safety concerns won’t hinder open sourcing, as the risks aren’t comparable to other potential dangers in the field. He expresses confidence in the eventual open-sourcing of the project.

Zuckerberg hints at forthcoming updates for Llama 3, focusing on iterative improvements for smaller models, such as extended context windows and increased multimodality.

The specifics of the multimodality feature remain undisclosed, though it does not appear that it will include video generation similar to OpenAI’s Sora for now.

Meta aims for enhanced personalization of its assistant, potentially allowing it to create images resembling users in the future.

Meta is vague about the specifics of the data used to train Llama 3, saying only that the training dataset is seven times larger than Llama 2’s and contains four times more code.

Although Zuckerberg has touted the size of Meta’s user data, none of it was used to train Llama 3.

Llama 3 incorporates a combination of “public” internet data and synthetic AI-generated data.

In other words, AI-generated data is itself being used to train AI.
