Despite attempts to filter out harmful material, Google’s large language models may be trained on inappropriate, racist, and pornographic web content. The Allen Institute for AI and The Washington Post investigated Google’s C4 dataset, released for academic research, to gain insight into the types of websites typically scraped for training large language models.

Note: Some of the top websites in multiple categories are given at the end of this article.

Google’s T5 (Text-to-Text Transfer Transformer) and Facebook’s Large Language Model Meta AI (LLaMA), a model that has caused concern, were both trained using the C4 dataset.

The ingestion of inappropriate material into the C4 dataset could potentially result in the creation of next-gen machine-learning systems that behave inappropriately and unreliably.

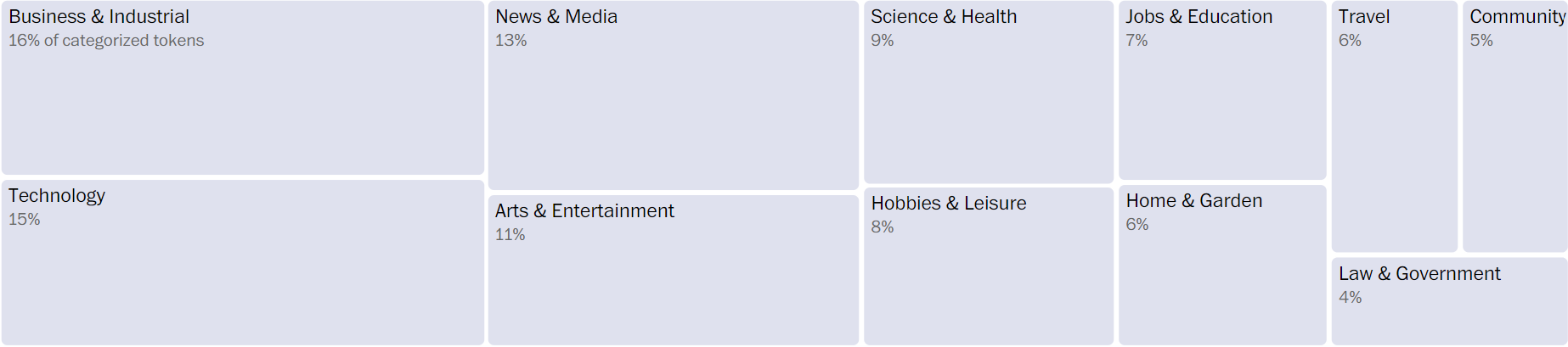

The Allen Institute and The Washington Post analyzed C4 by matching its text to pages found on the internet, then identifying and ranking the top 10 million websites included in the dataset. Although C4 is a more refined version of the Common Crawl dataset, which contains text from billions of web pages, it still included undesirable material sourced from the dark corners of the internet.
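To illustrate what ranking websites in a corpus can look like, here is a minimal sketch that groups documents by domain and orders the domains by how much text each contributed. The function name `rank_domains`, the sample URLs, and the choice of whitespace-separated words as the unit of measurement are assumptions made for illustration; this is not the investigators' actual pipeline.

```python
from collections import Counter
from urllib.parse import urlparse


def rank_domains(documents):
    """Return (domain, word_count) pairs sorted by descending word count.

    `documents` is an iterable of (url, text) pairs. Counting
    whitespace-separated words is an assumption of this sketch.
    """
    totals = Counter()
    for url, text in documents:
        host = urlparse(url).netloc.lower()
        # Treat "www.example.com" and "example.com" as the same site.
        if host.startswith("www."):
            host = host[4:]
        totals[host] += len(text.split())
    return totals.most_common()


# Hypothetical sample data, not taken from C4.
sample_documents = [
    ("https://www.example.com/a", "one two three"),
    ("https://example.com/b", "four five"),
    ("https://news.example.org/story", "six"),
]

ranking = rank_domains(sample_documents)
for domain, count in ranking:
    print(domain, count)
```

A ranking like this shows which sites dominate a corpus, but it says nothing about the quality of their text, which is why the investigation also looked at what the sites actually contain.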

The dataset contains content scraped from websites such as Stormfront, a platform for race hate; Kiwi Farms, a forum for doxing; and 4chan, a message board containing racist, anti-trans, and otherwise toxic text. As a result, it is not surprising that language models trained on this corpus may generate inappropriate content, discuss conspiracy theories, or promote suspicious ideologies.

In addition to containing inappropriate material, C4 also includes websites that host varying degrees of personal information, including voter registration databases.

Meanwhile, regulatory agencies in Canada, France, Italy, and Spain have launched investigations into OpenAI’s ChatGPT over data privacy concerns, as the model has the ability to ingest and generate sensitive information.

Large language models powering AI chatbots are not sentient or conscious, regardless of their impressive capabilities. Rather, they generate text by predicting the flow of words and sentences in response to user prompts, questions, or instructions. This process involves utilizing the vast quantities of data they have been trained on, learning from it, and attempting to emulate human writing.

As a result, the predictions these models make reflect patterns found in the various types of text humans produce, such as internet posts, news articles, poetry, and novels, which are compiled into extensive training datasets.
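The idea of predicting the next word from patterns in training text can be shown with a toy example. The sketch below builds a bigram model, which only counts which word most often follows another. Real large language models use neural networks over far longer contexts, so this is a deliberately simplified stand-in, and the names `train_bigram` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# A tiny hypothetical training corpus.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)

print(predict_next(model, "the"))    # "cat" follows "the" most often
print(predict_next(model, "slept"))  # nothing ever follows "slept"
```

Even at this scale the model can only echo what it was trained on: whatever appears in the corpus, desirable or not, shapes what it predicts.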

These systems consume vast amounts of data scraped from the internet but cannot differentiate between fact and fiction, so they can produce inaccurate output or simply repeat information they have seen.

Although companies developing large language models strive to exclude undesirable content during both the training and inference stages, their review processes are not always perfect. Furthermore, the developers of commercial AI models, such as those behind Microsoft’s Bing, OpenAI’s ChatGPT, and Google’s Bard, do not consistently disclose their methods for sourcing, cleaning, and processing training data.

Google filtered the data extensively before feeding it into the AI, as is standard practice for most companies. To produce the dataset, known as C4 or the Colossal Clean Crawled Corpus, duplicate and nonsensical text was removed.
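A rough sketch of this kind of cleaning is shown below. The specific heuristics (dropping pages that contain "lorem ipsum" placeholder text, keeping only lines that end in sentence punctuation, and removing lines already seen elsewhere) are illustrative assumptions, not a statement of Google's exact rules, and `clean_documents` is a hypothetical name.

```python
def clean_documents(documents):
    """Drop placeholder pages, navigation-like lines, and repeated lines.

    All three heuristics are assumptions chosen for illustration.
    """
    seen_lines = set()
    cleaned = []
    for text in documents:
        # Placeholder text suggests an unfinished or template page.
        if "lorem ipsum" in text.lower():
            continue
        kept = []
        for line in text.splitlines():
            line = line.strip()
            # Menus and button labels rarely end in sentence punctuation.
            if not line.endswith((".", "!", "?", '"')):
                continue
            # Skip text that already appeared earlier in the corpus.
            if line in seen_lines:
                continue
            seen_lines.add(line)
            kept.append(line)
        if kept:
            cleaned.append("\n".join(kept))
    return cleaned


# Hypothetical sample pages.
pages = [
    "Welcome to our site.\nHome | About | Contact",
    "Welcome to our site.\nWe sell bicycles.",
    "Lorem ipsum dolor sit amet.",
]

result = clean_documents(pages)
print(result)
```

Heuristics like these remove clutter, but they judge form rather than meaning, so a well-punctuated hateful page passes as easily as a well-punctuated news story.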

To eliminate unsuitable content, Google utilized the open-source “List of Dirty, Naughty, Obscene, and Otherwise Bad Words,” which includes 402 English terms and one emoji symbol (a hand making a common but nasty gesture). Typically, companies employ high-quality datasets to optimize model performance and prevent users from being exposed to undesirable content.

Although such blocklists are intended to reduce exposure to obscenities and racial slurs during training, they have also been shown to remove non-sexual LGBTQ content. Previous research has also indicated that many inappropriate instances still make it through the filters. For example, this investigation revealed hundreds of pornographic websites and over 72,000 mentions of “swastika,” which is one of the prohibited terms on the list.
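Both failure modes of a word blocklist can be seen in a small sketch. It assumes a page is dropped whenever any listed term appears as a whole word, and it handles single-word terms only. The term `badword` is a placeholder rather than an entry from the real list, and the function names are hypothetical.

```python
import re


def contains_blocked_term(text, blocklist):
    """Return True if any blocklisted term appears as a whole word."""
    words = set(re.findall(r"[a-z0-9']+", text.lower()))
    return any(term in words for term in blocklist)


def filter_pages(pages, blocklist):
    """Keep only pages with no blocklisted term (page-level filtering)."""
    return [page for page in pages if not contains_blocked_term(page, blocklist)]


# Placeholder blocklist and hypothetical pages.
blocklist = {"badword"}
pages = [
    "A neutral page about gardening.",
    "A health article that mentions badword in a clinical context.",
    "An abusive page that writes b4dword to dodge filters.",
]

kept = filter_pages(pages, blocklist)
for page in kept:
    print(page)
```

The benign health article is removed because it happens to use a listed term, while the abusive page survives because of a trivial misspelling. A filter that matches words without considering context makes both kinds of mistakes.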

The dataset also includes copyrighted material, with the © symbol appearing over 200 million times, and it remains uncertain whether companies that train models on data containing protected works are liable for intellectual property infringement.

While C4 contains content from the internet up until 2019, similar data collection practices were employed to create other, more recent models, and this research brings to light the problematic results that AI chatbots may produce.

Miscellaneous Sites:

- wowhead.com

- dumpsteroid.com

- coloradovoters.info

- flvoters.com

- stormfront.org

- kiwifarms.net

- 4chan.org

- b-ok.org

- threepercentpatriots.com

Some Of The Top Business & Industrial Sites Used To Train LLMs:

- fool.com

- kickstarter.com

- sec.gov

- marketwired.com

- city-data.com

- myemail.constantcontact.com

- finance.yahoo.com

- prweb.com

- entrepreneur.com

- globalresearch.ca

Some Of The Top News Sites Used To Train LLMs:

- nytimes.com

- latimes.com

- theguardian.com

- forbes.com

- huffpost.com

- washingtonpost.com

- businessinsider.com

- chicagotribune.com

- theatlantic.com

- aljazeera.com

Some Of The Top Religious Sites Used To Train LLMs:

- patheos.com

- gty.org

- jewishworldreview.com

- thekingdomcollective.com

- biblehub.com

- liveprayer.com

- lds.org

- wacriswell.com

- wdtprs.com

- bibleforums.org

Some Of The Top Technology Sites Used To Train LLMs:

- instructables.com

- ipfs.io

- docs.microsoft.com

- forums.macrumors.com

- medium.com

- makeuseof.com

- sites.google.com

- slideshare.net

- s3.amazonaws.com

- pcworld.com