Researchers from University College London have recently published a study in the journal Crime Science. The study ranks the ways artificial intelligence (AI) could be used to aid criminal activity over the next 15 years.

Contrary to the Hollywood image of robots overpowering humanity, the study suggests that deepfakes pose the greatest threat. Deepfakes are multimedia content created or edited with AI to make a person appear to do or say something they never actually did or said.

The study was based on a workshop in which a diverse group of stakeholders from security, academia, public policy, and the private sector carried out a threat assessment exercise.

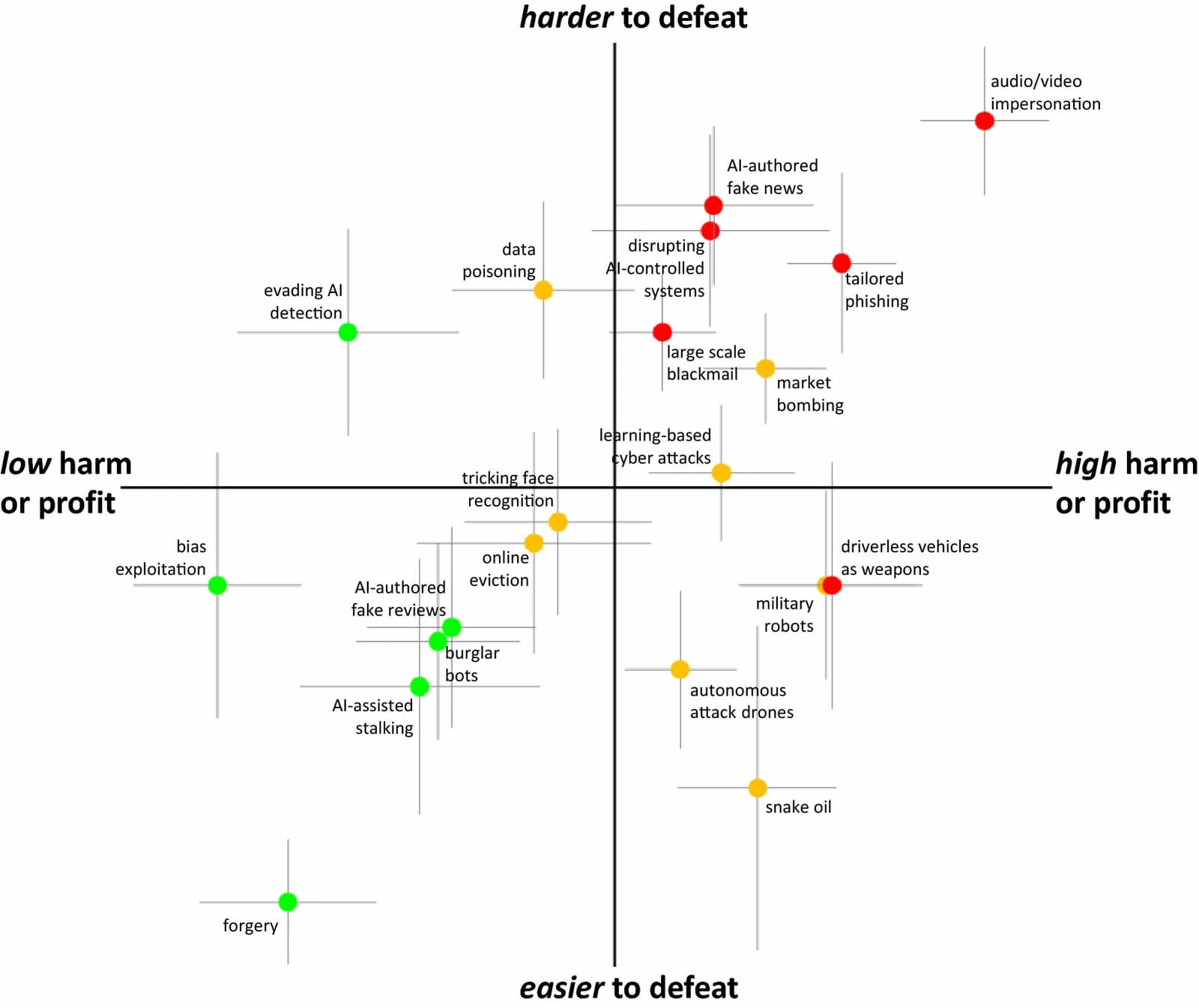

Participants were presented with a set of crimes and asked to rate each one on four aspects of threat severity: harm (to the victim or to society), criminal profit (how well the crime achieves a criminal goal), achievability (how feasible the crime would be), and defeatability (how easy or difficult it would be to defeat).

The list included familiar crimes, such as forgery, as well as newer ones, such as tricking facial recognition systems, using driverless vehicles as weapons, and deploying military robots.

The group compared 18 types of AI-enabled threats and ranked deepfakes as the most dangerous, because they undermine trust, something human society fundamentally depends on.

According to the study’s authors, “Humans are inclined to believe what they see and hear.” Recent advances in deep learning have made it far easier to create convincing fake content.

Technology that was largely used to create non-consensual pornography has now begun to spread into politics. The potential consequences are wide-ranging: criminals could impersonate people to scam their family members, fabricate videos to sow public distrust, or manipulate audio and video to produce blackmail material. Such attacks are difficult to spot and hard to stop.

According to the authors, changes in citizen behavior, such as distrusting visual evidence, might be the best defense. However, if even a fraction of fake visual evidence is convincing, it becomes much easier to discredit genuine evidence. So while such behavioral changes may be necessary, they could themselves be considered an indirect social harm caused by deepfakes.

Other top threats included:

- Using autonomous cars as remotely controlled weapons in vehicular terrorist attacks.

- AI-generated fake news.

- Targeted phishing.

- Military robots.

Unsurprisingly, the lowest-ranked threats were forgery, stalking, and burglar bots.

The study was funded by the Dawes Centre for Future Crime at UCL, whose director, Professor Shane Johnson, said, “We live in a constantly changing world, which creates new opportunities, good and bad.” He argued that it is crucial to anticipate future crime threats so that policymakers and other stakeholders who are able to act can do so before those threats become a problem.
