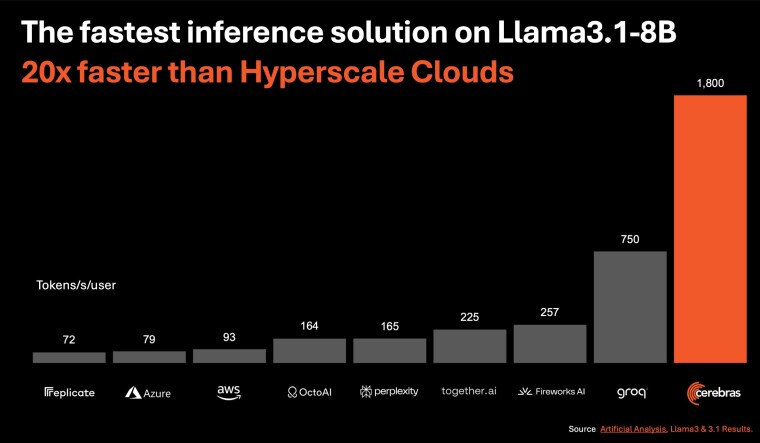

Cerebras Systems has unveiled Cerebras Inference, which it bills as the world’s fastest AI inference solution. The new offering delivers 1,800 tokens per second for Llama 3.1 8B and 450 tokens per second for Llama 3.1 70B, which the company says is nearly 20 times faster than NVIDIA GPU-based AI inference solutions available in hyperscale clouds, including Microsoft Azure.

The new inference solution is also priced at a fraction of the cost of popular GPU clouds, starting at 10 cents per million tokens, which Cerebras says provides 100x higher price-performance for AI workloads.

“Cerebras’ 16-bit accuracy and 20x faster inference capabilities enable AI app developers to create next-generation AI applications without sacrificing speed or cost.

“This price-performance ratio is achieved through the Cerebras CS-3 system, powered by the Wafer Scale Engine 3 (WSE-3) AI processor.

“The CS-3 offers 7,000 times more memory bandwidth than the NVIDIA H100, effectively addressing generative AI’s memory bandwidth challenges.”

Cerebras Inference is available in three plans: the Free Tier offers free API access and generous usage limits; the Developer Tier provides an affordable API endpoint with Llama 3.1 8B and 70B models priced at 10 cents and 60 cents per million tokens, respectively; and the Enterprise Tier offers fine-tuned models, custom service level agreements, and dedicated support, accessible via a Cerebras-managed private cloud or on customer premises.
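To put the quoted figures in perspective, the short sketch below estimates how long a response would take to generate and what it would cost at the throughput and Developer Tier prices cited in this article. It is a back-of-the-envelope illustration only: the model labels and the `estimate` function are hypothetical names and not part of any Cerebras API, and the sketch assumes a single request running at the quoted output speed, with the per-million-token price applied uniformly and no allowance for prompt processing or network latency.

```python
# Throughput and price figures are the ones quoted in this article.
# The dictionary keys are illustrative labels, not official model identifiers.
MODELS = {
    "llama3.1-8b": {"tokens_per_second": 1800, "usd_per_million_tokens": 0.10},
    "llama3.1-70b": {"tokens_per_second": 450, "usd_per_million_tokens": 0.60},
}


def estimate(model: str, tokens: int) -> tuple[float, float]:
    """Return (seconds to generate, cost in USD) for a given token count."""
    spec = MODELS[model]
    seconds = tokens / spec["tokens_per_second"]
    cost = tokens / 1_000_000 * spec["usd_per_million_tokens"]
    return seconds, cost


if __name__ == "__main__":
    # Example: generating 90,000 tokens with each model.
    for name in MODELS:
        seconds, cost = estimate(name, 90_000)
        print(f"{name}: {seconds:.0f} s, ${cost:.4f}")
```

Under these assumptions, 90,000 tokens would take roughly 50 seconds on Llama 3.1 8B and 200 seconds on Llama 3.1 70B, at a cost of under one cent and about five cents, respectively.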

The Cerebras team said the following about the Cerebras Inference launch:

“With record-breaking performance, industry-leading pricing, and open API access, Cerebras Inference sets a new standard for open LLM development and deployment. As the only solution capable of delivering both high-speed training and inference, Cerebras opens entirely new capabilities for AI.”
