There are a lot of steps here, but don’t treat this as an exhaustive list. We’ve only included the most common steps you’ll come across while building a machine learning project.

The number one thing to remember is that machine learning is experimental. Try something and see if it works. If it doesn’t, try something else or modify it, and repeat the process.

Your primary goal for machine learning problems should be reducing the time between your experiments.

This article is for anyone searching for “how to start a machine learning project” and wondering what the steps are to build a “first machine learning project”.


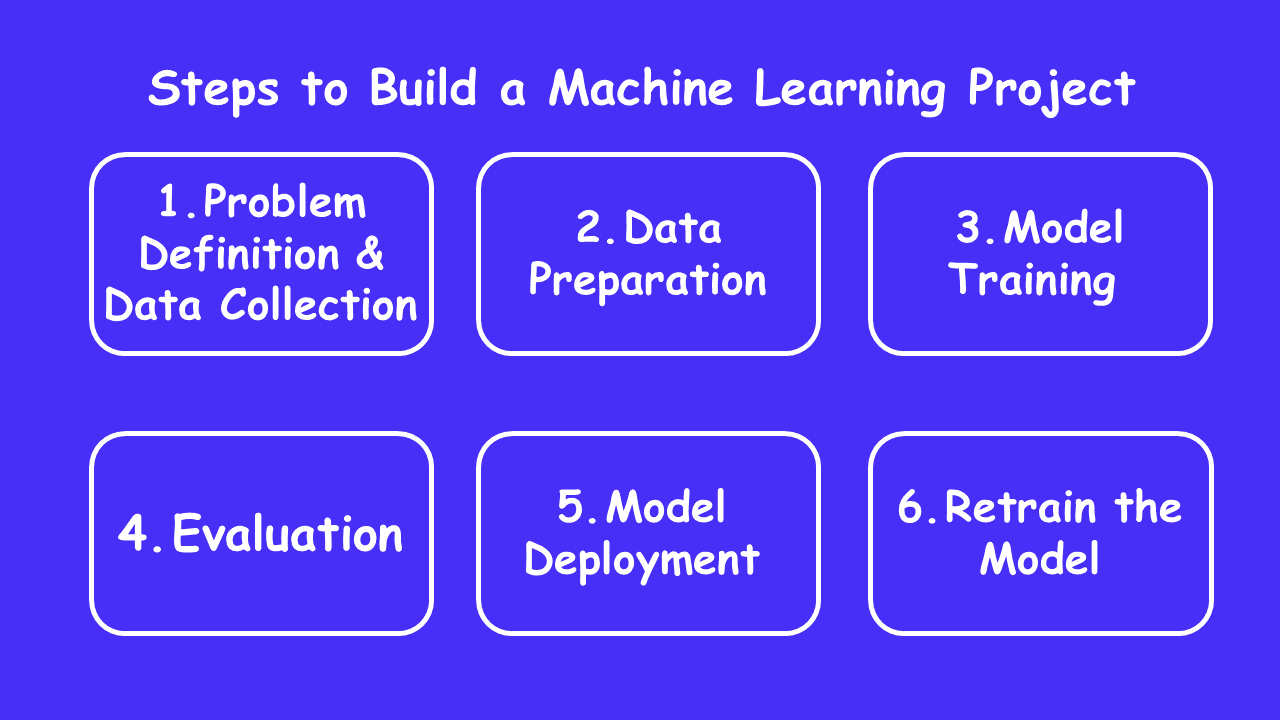

How Would You Develop A Machine Learning Project? Six Core Steps To Build Your First ML Project Are

- Problem Definition and Data Collection

- Data Preparation

- Model Training

- Analysis (Accuracy, Precision, and other measures)

- Model Deployment

- Retrain the Model

Let’s dive a little deeper into each step.

1. Problem Definition And Data Collection

To define a machine learning problem, you need to know these three types of machine learning and the problems that fall under each type.

Problem Definition

1st Type: Supervised learning

Supervised learning is called supervised because you have data and labels. A machine learning algorithm tries to learn what patterns in the data lead to the labels.

The supervised part happens during training. If the algorithm guesses a wrong label, it tries to correct itself.

For example, say you were trying to predict heart disease in a new patient. You may have the anonymized medical records of 100 patients as the data and whether or not they had heart disease as the label.

Problems with labels come under supervised learning

2nd Type: Unsupervised learning

Unsupervised learning is when you have data but no labels. The data could be the purchase history of your online video game store customers.

Using this data, you may want to group similar customers together so you can offer them specialized deals. You could use a machine learning algorithm to group your customers by purchase history.

3rd Type: Transfer learning

Transfer learning is when you take the information an existing machine learning model has learned and adjust it to your own problem.

For example, after training a classifier to predict whether an image contains food, you could reuse the knowledge it gained during training to recognize drinks.

Now you can identify the problem. So, let’s move on to data collection.

Data Collection

Find the data sources you will use in your ML project. Once you have identified your data sources, it is time to collect the data.

Ask yourself: What type of data do you have? How does it match the problem definition? Is your data structured or unstructured? Static or streaming?

Different types of data you should know

There are two main types of data: i) Structured Data and ii) Unstructured Data.

i) Structured Data: Data that appears in tabulated format (rows and columns style, like what you’d find in an Excel spreadsheet). It can contain different types of data, for example, numerical, categorical, and time series.

Different formats of structured data are

a. Nominal/categorical: One thing or another (mutually exclusive). For example, for car sales, color is a category. A car may be blue but not white. Order does not matter.

b. Numerical: Any continuous value where the difference between them matters. For example, when selling houses, $103,000 is more than $50,000

c. Ordinal: Data which has order but the distance between values is unknown. For example, questions such as, how would you rate your health from 1-10? 1 being poor, 10 being healthy.

You can answer 1, 2, 3, 4, 5, 6, 7, 8, 9, or 10, but the distance between each value is unknown: an answer of 10 doesn’t necessarily mean 10 times as good as an answer of 1.

d. Time series: Data across time. For example, the historical sale values of Bulldozers from 2014-2020.

ii) Unstructured Data: Data with no rigid structure (images, video, natural language text, speech).

2. Data Preparation

There are three steps for Data Preparation

i. Exploratory data analysis (EDA) – Learning about the data you’re working with

ii. Data Preprocessing – Preparing data for model training

iii. Data Splitting – Splitting data into sets


i. Steps for Exploratory data analysis (EDA)

1st Step – What are the feature variables (input) and the target variables (output)? : For example, for predicting heart disease, the feature variables may be a person’s age, weight, average heart rate, and level of physical activity.

And the target variable will be whether or not they have heart disease (note: this example has been very simplified).

2nd Step – What kind of data do you have? Structured, unstructured, categorical, numerical: Create a data dictionary for what each feature is.

For example, if you’ve got a column of numbers called “hr”, how would someone else know that actually means Heart Rate?

3rd Step – Are there missing values? Should you remove them or fill them with feature imputation (see below)?

4th Step – Where are the outliers? How many of them are there? Are they out by much (3+ standard deviations)? Why are they there?

5th Step – Are there questions you could ask a domain expert about the data? : For example, would a heart disease physician be able to shed some light on your heart disease dataset?

Find the answers to the above-given questions and implement the solution step by step as per your requirements.
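As a rough illustration of the outlier question in the 4th step, the sketch below flags values that sit more than 3 standard deviations from the mean. The `find_outliers` helper and the heart rate readings are made up for this example, and a z-score check is only one of several ways to look for outliers.

```python
from statistics import mean, pstdev


def find_outliers(values, threshold=3.0):
    """Return (index, value) pairs more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values identical, so nothing can be an outlier
    return [(i, v) for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical resting heart rates; 190 looks like a data-entry error.
heart_rates = [62, 65, 70, 71, 68, 66, 72, 69, 64, 67, 70, 73, 190]
outliers = find_outliers(heart_rates)
print(outliers)
```

Finding an outlier is only the start. Whether to drop it, cap it, or keep it is a question for the domain expert mentioned in the 5th step.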

ii. Data Preprocessing

After learning about the data you’re working with, it’s time to move on to Data Preprocessing: preparing your data to be modeled. Data Preprocessing is divided into 6 main steps, each with sub-steps. Let’s dive in.

The first step of data preprocessing is Feature imputation

To fill in the missing values (a machine learning model can’t learn on data that isn’t there).

Types of Imputation you should know

a. Single imputation: Fill with the mean or median of the column.

b. Multiple imputation: Model the missing values from the other features and fill in what your model finds.

c. KNN (k-nearest neighbors): Fill data with a value from another example which is similar.

d. Many more, such as random imputation, last observation carried forward (for time series), moving window, most frequent.
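Single imputation is simple enough to sketch in a few lines. The `impute` helper and the `ages` column below are hypothetical, and missing values are assumed to be stored as `None`. In practice you would more likely reach for a library implementation, but the idea is the same.

```python
from statistics import mean, median


def impute(column, strategy="mean"):
    """Fill missing entries (None) with the mean or median of the observed values."""
    observed = [v for v in column if v is not None]
    fill = mean(observed) if strategy == "mean" else median(observed)
    return [fill if v is None else v for v in column]


ages = [25, None, 40, 35, None, 60]  # hypothetical column with two missing values
mean_filled = impute(ages, "mean")
median_filled = impute(ages, "median")
print(mean_filled)
print(median_filled)
```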

The second step of Data Preprocessing is Feature encoding

Turning values into numbers (A machine learning model requires all values to be numerical)

Different types of Feature Encoding you should know

a. One Hot Encoding: Turn each unique value into a list of 0’s and 1’s where the position for that value is 1 and the rest are 0’s.

For example, with car colors green, red, and blue, a green car’s color feature would be represented as [1, 0, 0] and a red one would be [0, 1, 0].

b. Label Encoder: Turn labels into distinct numerical values. For example, if your target variables are different animals, such as a dog, cat, or bird, these could become 0, 1, or 2 respectively.

c. Embedding encoding: Learn a representation amongst all the different data points.

For example, a language model is a representation of how different words relate to each other. Embeddings are also becoming more widely available for structured (tabular) data.
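The first two encodings can be sketched in plain Python using the car color and animal examples above. The helper names are invented for this illustration, and the category order is passed in explicitly so the output is predictable.

```python
def one_hot_encode(values, categories):
    """Turn each value into a list with a 1 at its category's position and 0 elsewhere."""
    index = {category: i for i, category in enumerate(categories)}
    encoded = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1
        encoded.append(row)
    return encoded


def label_encode(values, categories):
    """Turn each value into the integer position of its category."""
    index = {category: i for i, category in enumerate(categories)}
    return [index[value] for value in values]


colors = ["green", "red", "blue", "green"]
one_hot = one_hot_encode(colors, categories=["green", "red", "blue"])
labels = label_encode(["dog", "cat", "bird", "cat"], categories=["dog", "cat", "bird"])
print(one_hot)
print(labels)
```

Label encoding gives the categories an implied order (0 < 1 < 2), which is why it is usually kept for target labels, while one hot encoding is the safer choice for nominal input features.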

The third step of Data Preprocessing is Feature normalization (scaling) or standardization

When your numerical variables are on different scales (e.g. number_of_bathrooms is between 1 and 6 and size_of_land between 7,000 and 19,000 sq. feet), some machine learning algorithms don’t perform very well. Scaling and standardization help to fix this

Feature scaling (also called normalization) shifts your values so they always appear between 0 and 1. This is done by subtracting the min value and dividing by the max minus the min.

For example, with number_of_bathrooms between 1 and 6, 1 and 4 bathrooms would become 0.0 and 0.6 respectively (between 0 and 1 but the numerical relationship is still there).

Feature standardization standardizes all values so they have a mean of 0 and unit variance. It happens by subtracting the mean and dividing it by the standard deviation of that particular feature.

Doing this means values do not end up between 0 and 1 (their range is uncapped). Standardization is generally less sensitive to outliers than min-max scaling.
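Both formulas fit in a couple of lines each. The sketch below applies them to a made-up number_of_bathrooms column covering 1 to 6. The function names are illustrative, and the population standard deviation is assumed.

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Feature scaling: (value - min) / (max - min), giving results in [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Feature standardization: (value - mean) / standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


bathrooms = [1, 2, 3, 4, 5, 6]  # hypothetical number_of_bathrooms column
scaled = min_max_scale(bathrooms)
standardized = standardize(bathrooms)
print(scaled)
print(standardized)
```

With this column, the minimum maps to 0, the maximum to 1, and 4 bathrooms lands at (4 - 1) / (6 - 1) = 0.6. The standardized values have no fixed range but are centered on 0.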

The fourth step of Data Preprocessing is Feature engineering

Transform data into (potentially) more meaningful representations by adding in domain knowledge.

Different ways to represent the data are

a. Decompose – Turn a date (such as 2021-03-31 18:00:36) into hour_of_day, day_of_week, day_of_month, is_holiday, etc.

b. Discretization – Turning larger groups into smaller groups

– For numerical variables, let’s say age, you may want to move it into buckets, such as, over_50 or under_50. Or, 21-30, 31-40, etc. This process is also known as binning (putting data into different ‘bins’).

– For categorical variables such as car color, this may mean combining colors such as ‘light green’, ‘dark green’ and ‘lime green’ into a single ‘green’ color

c. Combining two or more features – Difference between two features, such as using house_last_sold and current_date to get time_on_market.

d. Indicator features: Using other parts of the data to indicate something potentially significant

– Create an X_is_missing feature for wherever a column X contains a missing value.

– If you’re analyzing traffic sources from the web, you could add is_paid_traffic as a feature for advertisements that have been paid for.

– Does a particular sample satisfy more than 1 criterion? Such as if you were analyzing car sales data, does the car have less than 100,000 KM, is automatic, and is under 10 years old?

Perhaps you know from experience these cars are generally worth more. You could make a special feature called under_100km_auto_under_10yo.
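A few of these transformations can be sketched with the standard library alone. The function names, the dictionary layout of the car record, and the choice to put an age of exactly 50 into under_50 are all assumptions made for this example.

```python
from datetime import date, datetime


def decompose_timestamp(timestamp):
    """Decompose: split one timestamp string into several simpler features."""
    dt = datetime.strptime(timestamp, "%Y-%m-%d %H:%M:%S")
    return {
        "hour_of_day": dt.hour,
        "day_of_week": dt.weekday(),  # Monday is 0, Sunday is 6
        "day_of_month": dt.day,
    }


def age_bucket(age):
    """Discretization (binning): exactly 50 goes to under_50 here, an arbitrary choice."""
    return "over_50" if age > 50 else "under_50"


def time_on_market(house_last_sold, current_date):
    """Combine two features: number of days between the two dates."""
    return (current_date - house_last_sold).days


def add_indicator_features(car):
    """Indicator features for a hypothetical car-sales record stored as a dict."""
    enriched = dict(car)
    enriched["color_is_missing"] = car.get("color") is None
    enriched["under_100km_auto_under_10yo"] = (
        car["km"] < 100_000
        and car["transmission"] == "automatic"
        and car["age_years"] < 10
    )
    return enriched


parts = decompose_timestamp("2021-03-31 18:00:36")
days = time_on_market(date(2021, 1, 1), date(2021, 3, 31))
car = add_indicator_features(
    {"km": 80_000, "transmission": "automatic", "age_years": 6, "color": None}
)
print(parts, age_bucket(63), days, car)
```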

The Fifth step of Data Preprocessing is Feature selection

Selecting the most valuable features of your dataset to model. This can potentially reduce overfitting and training time (less overall data and less redundant data to train on) and improve accuracy.

Dimensionality reduction: A common dimensionality reduction method, PCA (Principal Component Analysis), takes a larger number of dimensions (features) and uses linear algebra to reduce them to fewer dimensions.

For example, say you have 10 numerical features, you could run PCA to reduce it down to 3.

Feature importance (post modeling). Fit a model to a set of data, then inspect which features were most important to the results, and remove the least important ones.

Wrapper methods such as genetic algorithms and recursive feature elimination involve creating large subsets of feature options and then removing the ones that don’t matter.

These have the potential to find a great set of features but can also require a large amount of computation time. TPOT does this.

Sixth Step of Data Preprocessing is Dealing with imbalances

Does your data have 1000 examples of one class but only 100 examples of another? What to do in such a situation?

- Collect more data (if you can)

- Use the scikit-learn-contrib imbalanced-learn package (a scikit-learn compatible Python library for dealing with imbalanced datasets)

- Use SMOTE (synthetic minority over-sampling technique) which creates synthetic samples of your minor class to try and level the playing field. You can access an implementation of SMOTE contained within the scikit-learn-contrib imbalanced-learn package

- A helpful (and technical) paper to look at is “Learning from Imbalanced Data”. You could use this as a starting point to go onto the next thing.
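To make the idea of rebalancing concrete, here is a minimal sketch of naive random oversampling, which simply duplicates minority-class examples until the classes are even. This is not SMOTE (SMOTE creates new synthetic points rather than copies), and the `random_oversample` helper is invented for this example, not part of imbalanced-learn.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen minority examples until every class matches the largest."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_samples, out_labels = list(samples), list(labels)
    for cls, count in counts.items():
        pool = [s for s, label in zip(samples, labels) if label == cls]
        for _ in range(target - count):
            out_samples.append(rng.choice(pool))
            out_labels.append(cls)
    return out_samples, out_labels


# Hypothetical dataset: 1000 examples of one class, 100 of another.
samples = list(range(1100))
labels = ["majority"] * 1000 + ["minority"] * 100
balanced_samples, balanced_labels = random_oversample(samples, labels)
balanced_counts = Counter(balanced_labels)
print(balanced_counts)
```

Oversampling should only be applied to the training set. Duplicated examples leaking into the validation or test sets would make the evaluation look better than it is.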

iii. Data Splitting

Your data is now prepared for modeling. But before training the model, you have to split the data into sets.


Different types of sets you should know

a. Training set (usually 70-80% of data): Model learns on this.

b. Validation set (typically 10-15% of data): Model hyperparameters are tuned on this

c. Test set (usually 10-15%): Model final performance is evaluated on this. If you’ve done it right, hopefully, the results of the test set give a good indication of how the model should perform in the real world. Do not use this dataset to tune the model.
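A three-way split along these lines can be sketched as follows. The 70/15/15 fractions, the fixed seed, and the `split_dataset` name are assumptions for the example. For time series data you would usually split by time rather than shuffle.

```python
import random


def split_dataset(rows, train_frac=0.70, val_frac=0.15, seed=42):
    """Shuffle a copy of the rows, then slice into training, validation, and test sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever remains (about 15% here)
    return train, val, test


rows = list(range(100))  # stand-in for 100 prepared examples
train, val, test = split_dataset(rows)
print(len(train), len(val), len(test))
```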


3. Train The Model On The Data You’ve Prepared

Core Steps of training a model:

i. Choose an algorithm,

ii. Overfit the model,

iii. Reduce overfitting with regularization

i. Choosing an algorithm

Many of the algorithms you see here are already implemented for you in libraries such as Scikit-Learn. You should always look to use a pre-implemented version of a machine learning model before building your own.

If in doubt, search something like “[ALGORITHM_NAME] scikit learn”. Every learning algorithm has:

- a loss function (how wrong the model is)

- an optimization criterion (a criterion for improving the loss, also called an optimizer, like stochastic gradient descent)

- an optimization routine that uses training data to find a solution for the optimization criterion (use a plethora of examples of what a model ‘should’ learn to improve what it ‘does’ learn)
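To see those three pieces working together, the sketch below fits a straight line with plain (full-batch) gradient descent: mean squared error is the loss, the gradient step is the optimizer, and the loop over the training data is the optimization routine. The data, the learning rate, and the `fit_line` name are made up for this illustration. A real project would use a library implementation.

```python
def fit_line(xs, ys, learning_rate=0.05, epochs=1000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the loss (mean squared error) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Optimizer step: move the parameters against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))
```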

Choose an algorithm as per your requirement and move toward the next phase i.e. fitting the model

ii. Overfitting and Underfitting the Model

There are two types of fitting in machine learning

a. Underfitting: This happens when your model doesn’t perform as well as you’d like on your data. Try training for longer or a more advanced model.

b. Overfitting: This happens when your validation loss (how your model is performing on the validation dataset, lower is better) starts to increase while the training loss keeps decreasing.

Or, if you don’t have a validation set, it happens when the model performs far better on the training set than on the test set (e.g. 99% accuracy on the training set, 67% accuracy on the test set). Fix it through various regularization techniques.

iii. Reducing Overfitting with Regularization

Regularization: a collection of techniques to prevent/reduce overfitting.

Steps in Regularization

1st Step – L1 (lasso) and L2 (ridge) regularization: L1 regularization sets unneeded feature coefficients to 0 (performs feature selection on which features are most essential and which aren’t, useful for model explainability). L2 constrains a model’s feature coefficients (shrinks them but won’t set them to 0).

2nd Step – Dropout: Randomly remove parts of your model so the rest of it has to become better.

3rd Step – Early stopping: Stop your model from training before the validation loss starts to increase too much or more generally, any other metric has stopped improving. Early stopping is usually implemented in the form of a model callback.

4th Step – Data augmentation: Manipulate your dataset in artificial ways to make it ‘harder to learn’. For example, if you’re dealing with images, randomly rotate, skew, flip, and adjust the height of your images. This makes your model have to learn similar patterns across different styles of the same image (harder). Note: since this can be compute-intensive, it’s a good idea to do this in memory. See functions like ImageDataGenerator in Keras or transforms in Torchvision.

5th Step – Batch normalization: standardize a layer’s inputs (zero mean, unit variance) and then apply two learnable parameters (gamma, which rescales the values, and beta, which offsets them) before they go into the next layer. A small constant, epsilon, is added to the variance to avoid division by zero.

This often results in faster training since the normalized inputs let the optimizer take larger, more stable steps. May be a replacement for dropout in some networks.
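Early stopping is the easiest of these techniques to show without a deep learning framework. The sketch below scans a list of per-epoch validation losses and stops once the loss has failed to improve for `patience` epochs in a row. The function name, the `patience` parameter, and the loss values are assumptions for the example. Framework callbacks follow the same idea but offer more options.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (epoch where training stops, epoch with the best validation loss)."""
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch  # no improvement for `patience` epochs
    return len(val_losses) - 1, best_epoch  # never triggered, ran to the end


# Hypothetical validation losses: improving at first, then rising (overfitting).
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
stopped_at, best_epoch = early_stopping_epoch(val_losses, patience=3)
print(stopped_at, best_epoch)
```

In a real training loop you would also keep a copy of the model weights from the best epoch and restore them after stopping.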

Once you have dealt with overfitting and underfitting, the next step is hyperparameter tuning.

Hyperparameter Tuning: Run a bunch of experiments with different model settings and see which works best.

Setting a learning rate (often the most important hyperparameter), generally, high learning rate = algorithm rapidly adapts to new data, low learning rate = algorithm adapts slower to new data (e.g. for transfer learning).

- Finding the optimal learning rate: train the model for a few hundred iterations starting with a very low learning rate (e.g. 10e-6) and slowly increasing it to a very large value.

- Learning rate scheduling involves decreasing the learning rate slowly as the model learns more (gets closer to convergence). It can be combined with an adaptive optimizer such as Adam.

- Cyclic learning rate: dynamically change the learning rate up and down between some threshold and potentially speed up training
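The two schedules mentioned above can be written as small functions of the training step. Exponential decay and a triangular cycle are just one common choice of each, and the function names and numbers below are assumptions for illustration only.

```python
def exponential_decay(initial_lr, decay_rate, step):
    """Learning rate scheduling: shrink the rate by a constant factor every step."""
    return initial_lr * (decay_rate ** step)


def triangular_cyclic_lr(step, base_lr, max_lr, step_size):
    """Cyclic learning rate: rise linearly from base_lr to max_lr, then fall back."""
    cycle_position = step % (2 * step_size)
    # distance is 1 at the ends of a cycle and 0 at its peak
    distance = abs(cycle_position - step_size) / step_size
    return base_lr + (max_lr - base_lr) * (1 - distance)


decayed = [exponential_decay(0.1, 0.5, step) for step in range(4)]
cyclic = [
    triangular_cyclic_lr(step, base_lr=0.001, max_lr=0.01, step_size=2)
    for step in range(5)
]
print(decayed)
print(cyclic)
```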

Other Hyperparameters you can tune

- Number of layers (deep learning networks)

- Batch size (how many examples of data your model sees at once). Use the largest batch size you can fit in your GPU memory. If in doubt, use batch size 32 (see Yann LeCun’s Tweet and the paper attached of course).

- Number of trees (decision tree algorithms)

- Number of iterations (how many times the model goes through the data). Note: Rather than tuning iterations, use early stopping.

- Many more, depending on the algorithm you’re using. Search “[algorithm_name] hyperparameter tuning”.

4. Analysis/Evaluation

Evaluation Metrics for Classification and regression Type of Problems

Classification Type Problems

Do you want to predict whether something is one thing or another? Such as whether a customer will churn or not churn? Or whether a patient has heart disease or not? Note, that there can be more than two things.

Two classes is called binary classification, and more than two classes is called multi-class classification. Multi-label is when an item can belong to more than one class.

For Classification type problems, Things you should pay attention to

a. False negatives — Model predicts negative, actually positive. In some cases, like email spam prediction, false negatives aren’t too much to worry about.

But if a self-driving car’s computer vision system predicts no pedestrian when there is one, this is not good.

b. False positives — Model predicts positive, actually negative. Predicting someone has heart disease when they don’t, might seem okay.

Better to be safe right? Not if it negatively affects the person’s lifestyle or sets them on a treatment plan they don’t need.

c. True negatives — Model predicts negative, actually negative. This is good.

d. True positives — Model predicts positive, actually positive. This is good.

e. Precision: What proportion of positive predictions were actually correct? A model that produces no false positives has a precision of 1.0.

f. Recall: What proportion of actual positives were predicted correctly? A model that produces no false negatives has a recall of 1.0.

g. F1 Score: A combination of precision and recall. The closer to 1.0, the better.

h. Receiver operating characteristic (ROC) curve & Area under the curve (AUC): The ROC curve is a plot comparing true positive and false positive rate.

The AUC metric is the area under the ROC curve. A model whose predictions are 100% wrong has an AUC of 0.0, and one whose predictions are 100% right has an AUC of 1.0.
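Precision, recall, and F1 all fall out of the four counts described above. The sketch below computes them for a small made-up set of binary labels, where 1 means positive. The function names are illustrative, and returning 0.0 when a denominator is zero is a convention chosen for this example.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    return {
        "tp": sum(1 for t, p in pairs if t == 1 and p == 1),
        "fp": sum(1 for t, p in pairs if t == 0 and p == 1),
        "tn": sum(1 for t, p in pairs if t == 0 and p == 0),
        "fn": sum(1 for t, p in pairs if t == 1 and p == 0),
    }


def precision_recall_f1(counts):
    """Derive precision, recall, and F1 (their harmonic mean) from the counts."""
    tp, fp, fn = counts["tp"], counts["fp"], counts["fn"]
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Hypothetical labels: 4 actual positives, 6 actual negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
counts = confusion_counts(y_true, y_pred)
precision, recall, f1 = precision_recall_f1(counts)
print(counts, precision, recall, f1)
```

Here the model makes 3 positive predictions, of which 2 are right (precision 2/3), and finds 2 of the 4 actual positives (recall 0.5).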

Regression Type Problems

Do you want to predict a specific number of something? Such as how much a house will sell for. Or how many customers will visit your site next month?

For Regression, Things you should pay attention to

a. MSE (mean squared error): The average squared difference between the estimated values and the actual value

b. MAE (mean absolute error): The average absolute difference between your model’s predictions and the actual numbers.

c. RMSE (root mean square error): The square root of the average of squared differences between your model’s predictions and the actual numbers.
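The three regression metrics differ only in how they treat each error, which a short sketch makes clear. The house prices below (in thousands) are invented for the example.

```python
from math import sqrt


def mse(y_true, y_pred):
    """Mean squared error: average of the squared differences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error: average of the absolute differences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: square root of MSE, in the target's own units."""
    return sqrt(mse(y_true, y_pred))


# Hypothetical sale prices in thousands of dollars.
actual = [200, 300, 400]
predicted = [210, 290, 430]
print(mse(actual, predicted), mae(actual, predicted), rmse(actual, predicted))
```

The errors here are 10, 10, and 30. Because MSE and RMSE square each error, the single large miss pulls RMSE (about 19.1) above MAE (about 16.7).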

Now, only two steps left…

5. Deploying a Model

Put the model into production and see how it goes. Evaluation metrics in vitro (in a notebook) are great, but until it’s in production, you won’t know how it performs for real. Tools you can use to deploy your machine learning model:

a. TensorFlow Serving (part of TFX, TensorFlow Extended)

b. PyTorch Serving (TorchServe)

c. Google AI Platform: make your model available as a REST API

d. Amazon SageMaker

6. Retrain Your Machine learning Model

See how the model performs after serving (or prior to serving) based on various evaluation metrics and revisit the above steps as required (remember, machine learning is experimental, so this is where you’ll want to track your data and experiments).

We hope this post on how to start a machine learning project and the steps to build your first machine learning project is helpful to you.