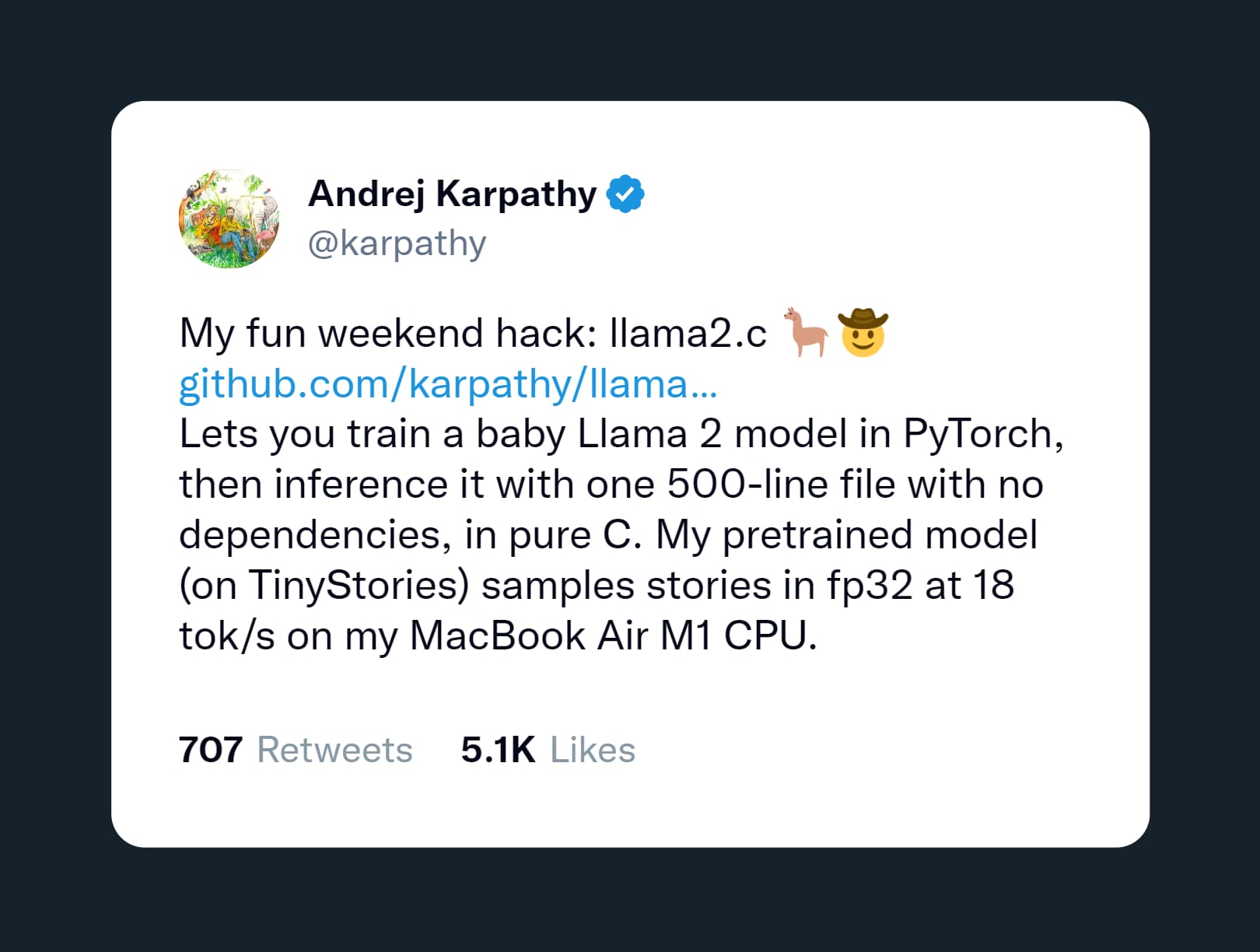

In a delightful twist, Andrej Karpathy, a founding member of OpenAI known for his contributions to deep learning, has taken a surprising turn. Instead of churning out GPT-5 as some might expect, he has immersed himself in a new weekend project: a simplified implementation of the Llama 2 model called Baby Llama 2!

The endeavor began as Karpathy’s experiment to test how well the open-source Llama 2 could run on a single computer. He adapted nanoGPT to implement the Llama 2 architecture, with inference written entirely in the C programming language. The result is a repository on GitHub, already garnering 2.2K stars.

What makes Karpathy’s approach truly impressive is that modestly sized models, with just a few million parameters, run at highly interactive rates.

For instance, he tested a Baby Llama 2 model with around 15 million parameters, trained on the TinyStories dataset. On his M1 MacBook Air, the model could churn out approximately 100 tokens per second in fp32. This feat showcases the potential to run such models on resource-constrained devices, thanks to his straightforward implementation.

The project’s progress left even Karpathy himself pleasantly surprised: the compiled C code ran faster on his M1 MacBook Air than he had anticipated.

Buoyed by this success, he has been actively updating the repository and has even begun experimenting with a 44 million parameter model, roughly three times larger. Remarkably, he managed to train it for 200k iterations with a batch size of 32 on 4 A100 GPUs, all in about eight hours.
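To put the two model sizes in perspective, here is a quick back-of-the-envelope calculation in Python. It assumes exactly 15 and 44 million parameters (the article only gives approximate counts) and 4 bytes per fp32 value, and it counts the weights alone, ignoring activations and any cache kept during generation. The function name is made up for illustration.

```python
BYTES_PER_FP32 = 4  # single-precision floats occupy 4 bytes each


def fp32_weight_megabytes(num_params: int) -> float:
    """Size of the weights alone in MB (10^6 bytes)."""
    return num_params * BYTES_PER_FP32 / 1e6


small = fp32_weight_megabytes(15_000_000)   # the ~15M parameter model
larger = fp32_weight_megabytes(44_000_000)  # the ~44M parameter model
print(f"{small:.0f} MB and {larger:.0f} MB")
```

Under these assumptions the weights come to about 60 MB and 176 MB, which sits comfortably in a laptop's memory.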


Karpathy’s Baby Llama 2 approach draws inspiration from Georgi Gerganov’s llama.cpp project, which ran the first version of LLaMA on a MacBook using C and C++. His method entails training the Llama 2 architecture from scratch in PyTorch and saving the model weights to a raw binary file.
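The idea of dumping weights to a raw binary file can be illustrated with a minimal Python sketch. It is not the repository's actual export code or file format: the function name `write_raw_checkpoint`, the two-integer header, and the tiny weight list are all assumptions made for illustration, and a plain list stands in for a PyTorch tensor so the example runs without extra dependencies.

```python
import os
import struct
import tempfile


def write_raw_checkpoint(path, rows, cols, weights):
    """Write a hypothetical header (two int32 dims), then the weights as little-endian fp32."""
    if len(weights) != rows * cols:
        raise ValueError("weights must contain rows * cols values")
    with open(path, "wb") as f:
        f.write(struct.pack("<ii", rows, cols))              # 8-byte header
        f.write(struct.pack(f"<{len(weights)}f", *weights))  # 4 bytes per value


# Usage: a 2x3 matrix flattened in row-major order.
path = os.path.join(tempfile.mkdtemp(), "model.bin")
write_raw_checkpoint(path, 2, 3, [0.5, -1.0, 2.0, 0.0, 1.5, 3.0])
file_size = os.path.getsize(path)
print(file_size)
```

Because the file is nothing but a header and a flat run of floats, a reader in any language can load it without a deserialization library.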

The magic lies in a roughly 500-line C file called ‘run.c,’ which loads the saved model and performs inference in single-precision floating point (fp32). This minimalist approach keeps memory usage low and needs no external libraries, enabling efficient execution on a single M1 laptop without relying on GPUs.
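The load-then-compute pattern described here can be sketched in a few lines. The example is in Python rather than C for brevity, and it is not a port of ‘run.c’: the file layout (two int32 dimensions followed by fp32 values) and the names `load_raw_checkpoint` and `matvec` are assumptions. Note also that Python floats are double precision, so this mirrors the data layout and the loop structure rather than exact fp32 arithmetic.

```python
import os
import struct
import tempfile


def load_raw_checkpoint(path):
    """Read a hypothetical header (two int32 dims), then rows * cols fp32 values."""
    with open(path, "rb") as f:
        rows, cols = struct.unpack("<ii", f.read(8))
        data = f.read(rows * cols * 4)
    weights = struct.unpack(f"<{rows * cols}f", data)
    return rows, cols, weights


def matvec(rows, cols, weights, x):
    """Multiply a row-major (rows x cols) matrix by a vector of length cols."""
    out = []
    for r in range(rows):
        acc = 0.0
        for c in range(cols):
            acc += weights[r * cols + c] * x[c]
        out.append(acc)
    return out


# Usage: write a tiny 2x3 matrix to disk, load it back, and apply it to a vector.
path = os.path.join(tempfile.mkdtemp(), "model.bin")
with open(path, "wb") as f:
    f.write(struct.pack("<ii", 2, 3))
    f.write(struct.pack("<6f", 1.0, 2.0, 3.0, 0.5, -1.0, 0.0))

rows, cols, weights = load_raw_checkpoint(path)
result = matvec(rows, cols, weights, [1.0, 1.0, 2.0])
print(result)
```

A matrix-vector product like this is the workhorse of transformer inference, which is why a plain loop over a flat array of floats gets surprisingly far without any external library.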

For those eager to dive into the wonders of the Baby Llama 2, Karpathy’s repository offers a pre-trained model checkpoint, along with code to compile and run the C code on your system. It’s a rare glimpse into the enchanting world of running a deep learning model in a minimalistic environment.

Of course, it’s essential to remember that Karpathy’s project remains a weekend experiment and is not intended for production-grade deployment, a fact he acknowledges. Nevertheless, it serves as a powerful demonstration of the potential to run Llama 2 models on low-powered devices using pure C code, a language that has not typically been considered suitable for machine learning, in part because it lacks built-in GPU integration.

As the trend for smaller models gains momentum, driven by the need to deploy them on smaller and local devices, Karpathy’s work stands as a testament to what is achievable on a single device.

Meta’s partnership with Microsoft opens up the possibility of releasing a collection of tiny LLMs based on Llama 2, while Qualcomm’s collaboration with Meta aims to make Llama 2 run seamlessly on local hardware.

Apple, too, is actively optimizing the Transformer architecture for Apple Silicon. In this exciting landscape, Karpathy’s inspiring project shows just how much is possible on modest hardware.
