Stability AI recently released the weights for Stable Diffusion 3 Medium, an image-synthesis model that turns text prompts into AI-generated images.

However, the release has drawn criticism online because its renderings of humans look like a regression compared with other advanced image-synthesis models such as Midjourney or DALL-E 3. The model readily produces visually disturbing, anatomically inaccurate images.

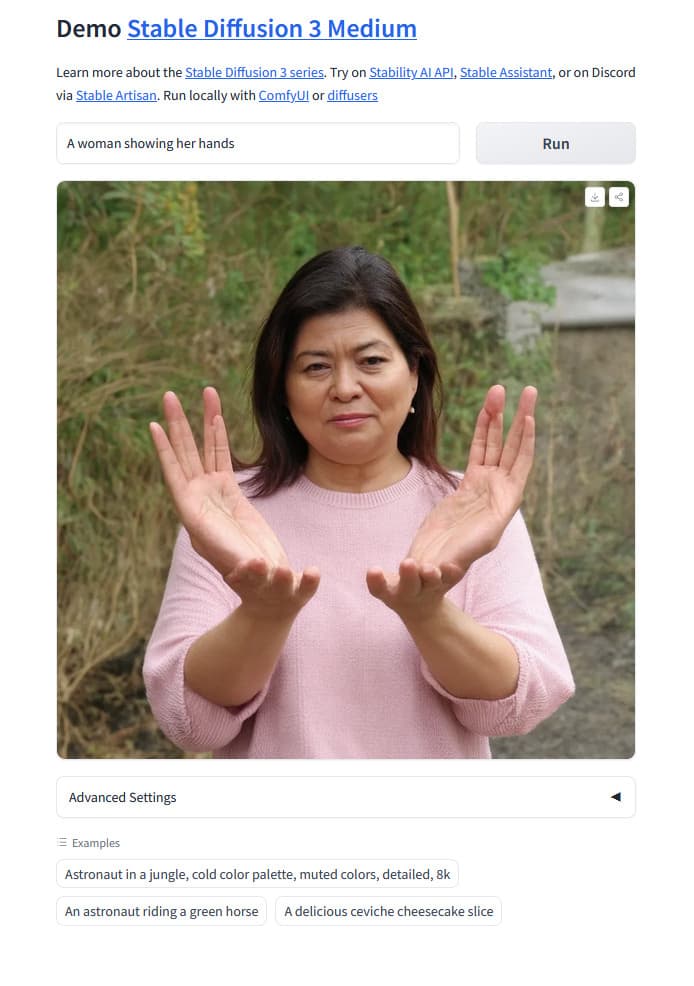

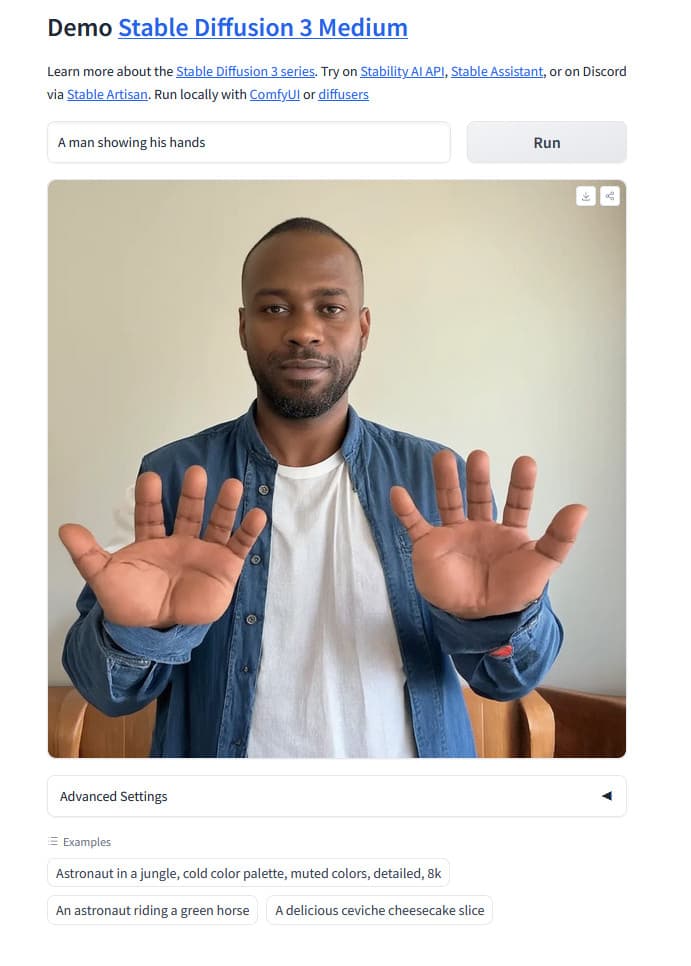

On Reddit, there is a discussion about the disappointing performance of SD3 Medium in rendering human figures, particularly hands and feet.

Another thread focuses on the struggles of SD3 in generating images of girls lying on grass, highlighting the overall difficulty in creating accurate human body representations.

While AI image generators have historically faced challenges with rendering hands, recent models have made significant progress in overcoming this issue.

Against that backdrop, SD3's underperformance is a setback for the community of image-synthesis enthusiasts on Reddit, especially when compared to the more successful SD XL Turbo release in November.

Some of the images generated using Stable Diffusion 3.

“Not too long ago, StableDiffusion was in competition with Midjourney, but now it seems to pale in comparison. At least we can be confident that our datasets are secure and ethical,” commented a Reddit user.

AI image enthusiasts currently attribute Stable Diffusion 3’s anatomy failures to Stability’s decision to filter adult content, often referred to as “NSFW” content, out of the training data.

One Reddit user commented, “It’s hard to believe, but heavy censorship of a model can also result in the removal of human anatomy, so… that’s what happened.”

Essentially, when a user’s prompt focuses on a concept that is not well-represented in the AI model’s training dataset, the image-synthesis model will generate its best interpretation of the user’s request. This can sometimes be quite unsettling.

Stable Diffusion 2.0, released in 2022, had similar trouble depicting humans accurately, and AI researchers found that filtering out adult content containing nudity could hinder a model’s ability to generate realistic human anatomy.

To address this, Stability AI reversed its approach with SD 2.1 and SD XL, regaining some lost capabilities by reducing the strong filtering of NSFW content.

Another issue that can arise during pre-training is that the NSFW filter researchers use to screen the dataset is too strict, so it unintentionally removes inoffensive images and limits the model’s exposure to depictions of humans in various scenarios.
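To illustrate how a filter threshold can have this side effect, here is a minimal Python sketch. It is not Stability AI's pipeline: the captions, the scores, and the `keep_below` helper are all invented for illustration, and it simply assumes a classifier that assigns each training image an NSFW score between 0 and 1.

```python
# Hypothetical (caption, nsfw_score) pairs. The scores are made up for
# illustration and do not come from any real classifier or dataset.
samples = [
    ("landscape at dusk", 0.02),
    ("man showing his hands", 0.35),
    ("woman lying on grass", 0.45),
    ("swimmer at the beach", 0.60),
    ("explicit image", 0.97),
]


def keep_below(samples, threshold):
    """Return captions of samples whose score is strictly below the threshold."""
    return [caption for caption, score in samples if score < threshold]


lenient = keep_below(samples, threshold=0.9)  # drops only the explicit image
strict = keep_below(samples, threshold=0.3)   # also drops every image of a person

print("lenient filter keeps:", lenient)
print("strict filter keeps:", strict)
```

In this toy setup the strict threshold leaves only the landscape, so a model trained on the filtered set would see no people at all.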

One user reported that “[SD3] works fine as long as there are no humans in the picture, I think their improved nsfw filter for filtering training data decided anything humanoid is nsfw.”

A free online demo of SD3 on Hugging Face produced results similar to those reported by others. For instance, the prompt “a man showing his hands” yielded an image of a man holding up two unusually large, backward hands, each with at least five fingers.

Some fans of Stable Diffusion believe that the issues with Stable Diffusion 3 Medium reflect the company’s mismanagement and indicate a decline.

While the company has not declared bankruptcy, some users have made grim jokes about that possibility after seeing SD3 Medium.
